4 Mixed effects models

My thanks in particular to Dave Hodgson, who’s fantastic Master’s course offered up the prototype for this workshop.

Slides can be downloaded from:

Firstly, install if necessary (using install.packages(); uncomment the code below if required) and load the lme4 package:

## load libraries

library(tidyverse)

library(lme4)Note: In this session I will use

tidyverseas the principal way to generate plots etc. because it’s far easier for many of the examples in this workshop. The base R code is provided for those of you that are not familiar withtidyverse.

This practical will focus on how to analyse data when the experimental design (or the surveyed explanatory variables) obliges us to study non-independent experimental units. You will find yourself distinguishing between random effects and fixed effects. You will find yourself frustrated at the lack of consensus regarding how to simplify fixed factors in mixed models. It takes a long time to understand how to deal with blocks (and other random effects): don’t expect understanding to come overnight, and do rely on books and websites to help you. But be warned, at this level of statistical prowess, much of the literature is written in Greek symbols and matrix algebra.

Health Warning: some of these mixed-effects modelling packages are quickly evolving, and future updates might play some havoc with the coding, and outputs from, the scripts written here. You will need to keep your eye on the forums in the future if this happens. As always, let me or your demonstrators know if you encounter difficulties.

If this workshop has whetted your appetite, then a range of nice examples can be found at Julian Faraway’s site: http://www.maths.bath.ac.uk/~jjf23/mixchange/index.html. These examples are discussed in his book: Extending the Linear Model with R.

4.1 A note on variable selection and model simplification

By design we have been a bit wary in focusing on the eponymous null hypothesis significance testing (NHST) ideas (and associated idolisation of p-values). We have done this because we want to emphasise that a statistical model is more than just a p-value, and also that a p-value on its own (without some measure of effect size) is meaningless. We appreciate that this might fly in the face of the way that you might have seen statistical models presented in courses or papers, and this section is our attempt to explain to you some of the controversies surrounding these approaches.

There are many philosophical complexities with the way that p-values are used in many papers, and there have been many papers published discussing their misuse and misinterpretations. A good one is Halsey et al. (2015).

This is not to say that null hypothesis significance testing (hereafter NHST) is wrong, it’s just that it’s easy to misuse, even for experienced data modellers. Here are some common challenges / misconceptions:

- A p-value is the “probability of observing a test statistic equal to or more extreme than the observed test statistic if the null hypothesis is true”; it is not the “probability that the null hypothesis is false”.

- Leads to a binary interpretation: “is there an effect?”“, rather than, “what is the size of the effect”?

- They are heavily dependent on sample size:

- a larger study with the same effect size and variance will be more statistically significant. Example: study is exploring differences between sample means from two groups, \(\bar{x}\) and \(\bar{y}\).

- \(~~\bar{x} = 10\), \(\bar{y} = 12\), \(s = 5\) (pooled SD), \(n = 5\) (per group): \(\mathbf{p = 0.4}\).

- \(~~\bar{x} = 10\), \(\bar{y} = 12\), \(s = 5\) (pooled SD), \(n = 30\) (per group): \(\mathbf{p = 0.0332}\).

- a larger study with the same effect size and variance will be more statistically significant. Example: study is exploring differences between sample means from two groups, \(\bar{x}\) and \(\bar{y}\).

- The p-value itself is a random variable, and can have a very wide variance

- Geoff Cumming’s ‘dance of the p-values’.

- Type I, II, S and M errors.

- Often, the null hypothesis of a zero effect is not a biologically valid one.

- Direction and magnitude of effects are important.

- Consider a large study which finds a small effect size of \(x\) (\(p < 0.001\)), with the minimum biologically significant effect size being larger than \(x\).

- Therefore, the highly (statistically) significant p-value provides strong evidence of a lack-of-effect. It corresponds to a negligible real-world effect estimated with high precision!

Many of these problems go away when a study is appropriately powered, with careful experimental design. In this case the sample size is large enough to have high power for the comparison of interest, and the study is often set up in such a way as to make the comparison of interest meaningful. Note that these kinds of study were what NHST were derived to model.

That being said, there are times when NHST can be useful, particularly if you have a complex system (perhaps with large numbers of explanatory variables), and you wish to produce a more parsimonious model (perhaps because it is easier to interpret).

4.1.1 Common NHST approaches to model simplification

Traditionally, if the response variable is Gaussian (normal), then you may have come across two frequently used approaches:

- F-tests: based on comparing the residual mean squared error with the regression mean squared error, or

- Likelihood ratio tests (LRT): based on comparing the model deviance between two models.

Both of these cases are exact tests for linear regression with Gaussian errors (but for mixed models these become approximate). Let’s have a look at a simple example using the fruitflies data from earlier practicals. These data are available in the “fruitfly.rds” file.

ff <- readRDS("fruitfly.rds")ff_lm <- lm(longevity ~ type, data = ff)

summary(ff_lm)##

## Call:

## lm(formula = longevity ~ type, data = ff)

##

## Residuals:

## Min 1Q Median 3Q Max

## -31.74 -13.74 0.26 11.44 33.26

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 63.560 3.158 20.130 < 2e-16 ***

## typeInseminated 0.520 3.867 0.134 0.893

## typeVirgin -15.820 3.867 -4.091 7.75e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 15.79 on 122 degrees of freedom

## Multiple R-squared: 0.2051, Adjusted R-squared: 0.1921

## F-statistic: 15.74 on 2 and 122 DF, p-value: 8.305e-07anova(ff_lm, test = "F")## Analysis of Variance Table

##

## Response: longevity

## Df Sum Sq Mean Sq F value Pr(>F)

## type 2 7845.3 3922.7 15.738 8.305e-07 ***

## Residuals 122 30407.5 249.2

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Here the anova() function performs an F-test for the longevity ~ type model vs. the null model (longevity ~ 1). The idea is that if the fit from the competing models is the same, then the ratio of mean squared errors will follow an F-distribution on 2 and 122 degrees-of-freedom (d.f.) in this case.

Here we obtain a test statistic of 15.738, which can be compared against an F-distribution on 2 and 122 d.f., which gives a p-value of \(8.3 \times 10^{-7}\) (i.e. a statistically significant change even at the 1% and 0.1% levels). Thus we would conclude that dropping type produces a statistically significantly inferior model fit compared to when it is left in the model.

We can do the same thing but using a likelihood ratio test as:

anova(ff_lm, test = "Chisq")## Analysis of Variance Table

##

## Response: longevity

## Df Sum Sq Mean Sq F value Pr(>F)

## type 2 7845.3 3922.7 15.738 8.305e-07 ***

## Residuals 122 30407.5 249.2

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Here the test statistic produces a p-value of \(5.9 \times 10^{-7}\); again highly statistically significant even at the 0.1% level, but slightly different to the F-test (because they are different tests). Here we are comparing \(-2 \times\) the difference in log-likelihoods between the competing models, which under the null hypothesis that the fits are similar, should follow a chi-squared distribution on 2 d.f. here (the d.f. is the difference in the number of parameters between the two nested models). In general, in linear regression with Gaussian errors the F-test is slightly more powerful than the LRT, but you can use either.

For Generalised Linear Models (GLMs) with non-Gaussian error structure the F-test is no longer valid, and the LRT is approximate (the latter is in fact asymptotically chi-squared, which means that the approximation gets better for larger sample sizes, but can be misleading in small samples).

If using F-tests or LRTs to compare models, then the models must be nested (i.e. you can get one model to equal the other by fixing some of the parameters—usually setting coefficients to be zero, as in the fruitflies example). An alternative is to use something like Akaike’s Information Criterion (AIC), which does not assess statistical significance and does not require the models to be nested (it is in essence a measure of predictive accuracy).

For mixed models things get trickier again, and there is much debate about optimal ways to perform model simplification and inference—see GLMM FAQ for more discussion. A nice description of the types of approaches we can use in different cases can be found at:

https://rdrr.io/cran/lme4/man/pvalues.html

A useful quote from the GLMM FAQ is:

“For special cases that correspond to classical experimental designs (i.e. balanced designs that are nested, split-plot, randomized block, etc.) … we can show that the null distributions of particular ratios of sums of squares follow an F distribution with known numerator and denominator degrees of freedom (and hence the sampling distributions of particular contrasts are t-distributed with known df). In more complicated situations (unbalanced, GLMMs, crossed random effects, models with temporal or spatial correlation, etc.) it is not in general clear that the null distribution of the computed ratio of sums of squares is really an F distribution, for any choice of denominator degrees of freedom.”

The developers of lme4 recommend (from worst to best)9:

- Wald Z-tests;

- For balanced, nested LMMs where degrees of freedom can be computed according to classical rules: Wald t-tests;

- Likelihood ratio test, either by setting up the model so that the parameter can be isolated/dropped (via

anova()ordrop1(), or via computing likelihood profiles; - Markov chain Monte Carlo (MCMC) or parametric bootstrap confidence intervals.

If I do perform model simplification or variable selection, even then I would present the final model results in terms of effect sizes and confidence intervals where possible (or via predictive plots), since although CIs suffer with some of the same problems as p-values, at least they focus on the magnitude of the effects. If I have large enough sample sizes and not too many variables, then it may well be fine just to fit one model and perform inference from that.

In this workshop we will introduce some common scenarios in which mixed models can be applied, and give examples of model simplification and inference in these cases. We will tend to use LRTs and profile confidence intervals simply because they are easier to compute. A section at the end will explore the use of parametric bootstrapping for those of you who are interested.

4.2 Non-independent data: Randomised Complete Block Design

Often you will find it impossible to allocate treatments to experimental units at random because they are structured in time or in space. This means that units are not independent of each other, hence their residuals will not be independent, and we have violated an important assumption of generalised linear modelling (and, indeed, of analysis of variance). However the recognition of natural structuring in our population of experimental units can be very useful. If the experimental design can be stratified so that all units that share a common ‘trait’ (e.g. share a corner of a field, or share a single incubator) can represent one or more full replicate of the proposed experiment, then we should use this natural structuring to absorb some of our enemy: noise (also called residual deviance).

An extreme case of using error-absorbers is the Randomised Complete Block Experimental Design. So-called because it includes blocks, each block contains a single replicate of the experiment, and experimental units within blocks are allocated to treatment combinations at random. The concept is best explained using an example.

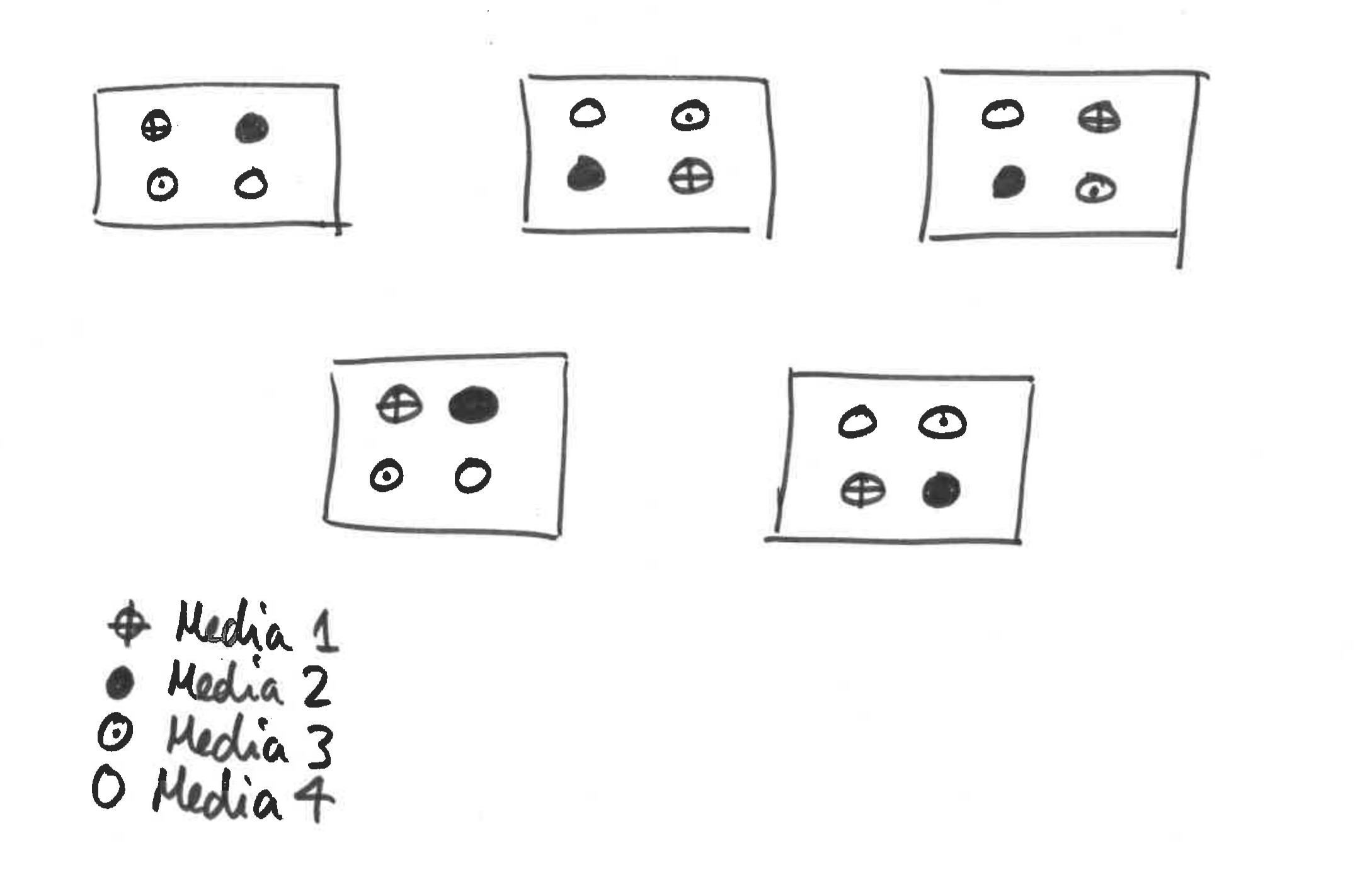

A microbiologist wishes to know which of four growth media is best for rearing large populations of anthrax, quickly. However, this poorly funded scientist does not own a large enough incubator in which to grow lots of replicate populations. Instead he requests space in five different incubators owned by other, better-funded researchers. Each incubator just has space for four bottles of medium. Our scientist allocates each growth medium to one bottle per incubator at random, inoculates with anthrax then monitors population growth rate. A schematic is given in Figure 4.1.

Figure 4.1: Schematic for bacterial growth example

These data are available in the “bacCabinets.rds” file. Read in this dataset and familiarise yourself with its structure.

bac <- readRDS("bacCabinets.rds")Let’s do the analysis wrongly to begin with:

bac_lm <- lm(growth ~ media, data = bac)

drop1(bac_lm, test = "F")## Single term deletions

##

## Model:

## growth ~ media

## Df Sum of Sq RSS AIC F value Pr(>F)

## <none> 152.38 48.613

## media 3 88.577 240.96 51.778 3.1002 0.05638 .

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Let’s refit the model with a cabinet effect, and then test for the statistical significance of media:

bac_lm <- lm(growth ~ media + cabinet, data = bac)

drop1(bac_lm, test = "F")## Single term deletions

##

## Model:

## growth ~ media + cabinet

## Df Sum of Sq RSS AIC F value Pr(>F)

## <none> 15.23 10.551

## media 3 88.577 103.81 42.936 23.264 2.734e-05 ***

## cabinet 4 137.150 152.38 48.613 27.016 6.380e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This is better. We need a cabinet effect here to deal with the fact that measurements within cabinets are not independent. Notice that the sum-of-squares for media is the same in this model compared to the one without cabinet in it. This is because we have a balanced design (i.e. the same number of measurements in each combination of explanatory variables). However, the F-statistic is different, and this is because this depends on the residual sum-of-squares, which is the second model is different due to the inclusion of cabinet, which soaks up some of the residual variance that can be attributed to differences between cabinets.

Note: here the

cabineteffect must be included in the model. It does not make sense for us to drop thecabineteffect and test for whether it should be included or not. It needs to be included by design! Hence we can ignore thecabinetline output bydrop1().

media and cabinet. What happens and why?

4.2.1 Mixed model approach

Let’s re-do this analysis using a mixed model. If you haven’t already, you will need to load the lme4 package:

library(lme4)Fixed and random effects:

mediais a fixed effect—we chose the media to be tested, each media has a specific identity, we want to estimate the differences in bacterial growth between different media;cabinetis a random effect—we don’t care about the identity of each cabinet, each cabinet is sampled from a population of possible cabinets, we just want to predict and absorb the variance in bacterial growth rate explained by cabinet.

Now on with the analysis:

bac_lmer <- lmer(growth ~ media + (1 | cabinet), data = bac)

summary(bac_lmer)## Linear mixed model fit by REML ['lmerMod']

## Formula: growth ~ media + (1 | cabinet)

## Data: bac

##

## REML criterion at convergence: 68.8

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -1.2306 -0.5407 0.1088 0.4320 1.7696

##

## Random effects:

## Groups Name Variance Std.Dev.

## cabinet (Intercept) 8.255 2.873

## Residual 1.269 1.127

## Number of obs: 20, groups: cabinet, 5

##

## Fixed effects:

## Estimate Std. Error t value

## (Intercept) 5.5800 1.3801 4.043

## media2 1.7800 0.7125 2.498

## media3 -1.9600 0.7125 -2.751

## media4 -3.8400 0.7125 -5.389

##

## Correlation of Fixed Effects:

## (Intr) media2 media3

## media2 -0.258

## media3 -0.258 0.500

## media4 -0.258 0.500 0.500Syntax for

lmer: Thelmercommand has two important sections:

- The first part corresponds to the ‘fixed effects’ part of the model (in this case,

growth ~ media), which is identical to the model you would enter intoglmif there were no problems of non-independence of data.- The second part is where you enter the ‘random effects’ part of the model, where you tell R how the data is nested and where the non-independence lies. There is a whole world of possibilities here, from spatially autocorrelated variance/covariance matrices to independently varying random slopes and intercepts. We keep it simple in this course. For this example, we assume no interaction between media and cabinet, hence we just want to predict and absorb the additive variance due to different cabinets. Hence our random effect term is

(1 | cabinet). This means: ‘the intercept of our linear model will vary according to cabinet’.

Mathematically this corresponds to: \[

Y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2}+ \beta_3 x_{i3} + \gamma_{C_i} + \epsilon_i

\] where \(\epsilon_i \sim N(0, \sigma^2)\), the \(x_{i*}\) are dummy binary variables corresponding to each level of media. For example: \[

x_{i1} = \left\{ \begin{array}{ll}

1 & \mbox{if measurement $i$ has media = 2}\\

0 & \mbox{otherwise},

\end{array}

\right.

\] and similarly for \(x_{i2}\) and \(x_{i3}\). Here \(\gamma_{C_i}\) is the random effect term for each cabinet (\(C_i = 1, \dots, 5\)). The reason why \(\gamma_{C_i}\) are denoted as random effects, is that we assume they come from some distribution such that \(\gamma_{j} \sim N(0, \sigma^2_{\gamma})\) (for \(j = 1, \dots, 5\)). I promise this will be the last equation in these notes!

4.2.1.1 REML vs. ML

To fit these models we use an approach called maximum likelihood (ML). ML is a very general technique with desirable asymptotic properties. However, ML estimators can be biased, particularly for small sample sizes. In mixed models an alternative approach, known as restricted maximum likelihood (REML), can be used, which produces better estimates of the model coefficients, particularly for small sample sizes. However, the REML likelihood function is different to the ML likelihood function, and only makes sense for mixed models (since it’s designed to estimate the variance components).

We may have to switch between these two approaches depending on what we want to do. In general, if you want to compare nested models that only differ in the random effects, then you can use either REML or ML. However, if you want to compare models that differ in their fixed effects, then for balanced, nested designs we can usually derive suitable tests based on REML fits. For unbalanced or non-nested designs you will need to use ML. Hence, if we are performing variable selection or model simplification, then we may have to switch to ML when comparing models, but then refit the model using REML in order to produce estimates of the coefficients.

Note: for various reasons the authors of

lme4do not provide p-values as standard from a call tosummary()oranova(). We can perform a likelihood ratio test for the impact of droppingmediafrom the fitted model. The simplest way to do this is to use thedrop1()function:Since this is a balanced design, then we can use either REML or ML to generate appropriate likelihood ratio tests for assessing the impact of dropping fixed effects. In an unbalanced design, then we would need to refit the model using ML. The easiest way to do this is in situ, using the

update()function and setting the argumentREML = F. This does not change the originalbac_lmermodel object.Trying both approaches here should give the same result.

drop1(bac_lmer, test = "Chisq")## Single term deletions

##

## Model:

## growth ~ media + (1 | cabinet)

## Df AIC LRT Pr(Chi)

## <none> 85.544

## media 3 108.333 28.789 2.48e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1drop1(update(bac_lmer, REML = F), test = "Chisq")## Single term deletions

##

## Model:

## growth ~ media + (1 | cabinet)

## Df AIC LRT Pr(Chi)

## <none> 85.544

## media 3 108.333 28.789 2.48e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1We have used a likelihood ratio test10 to assess whether the removal of media corresponds to a statistically significantly inferior model fit, which in this case it does at both the 5% and 1% levels. Hence we should leave media in the model. Once we have the final model, we can look at a summary of the model fit:

summary(bac_lmer)## Linear mixed model fit by REML ['lmerMod']

## Formula: growth ~ media + (1 | cabinet)

## Data: bac

##

## REML criterion at convergence: 68.8

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -1.2306 -0.5407 0.1088 0.4320 1.7696

##

## Random effects:

## Groups Name Variance Std.Dev.

## cabinet (Intercept) 8.255 2.873

## Residual 1.269 1.127

## Number of obs: 20, groups: cabinet, 5

##

## Fixed effects:

## Estimate Std. Error t value

## (Intercept) 5.5800 1.3801 4.043

## media2 1.7800 0.7125 2.498

## media3 -1.9600 0.7125 -2.751

## media4 -3.8400 0.7125 -5.389

##

## Correlation of Fixed Effects:

## (Intr) media2 media3

## media2 -0.258

## media3 -0.258 0.500

## media4 -0.258 0.500 0.500Note that this summary has been produced from the model fit using REML, even though the last LRT was conducted using the model fitted using ML—this is because we updated the model in situ within drop1().

The ‘intercept’ of the lmer model is the mean growth rate in media1 for an average cabinet. lmer output also gives you information criteria about the model, tells you the standard deviation of the random effects, correlations between levels of fixed effects, and so on.

Note: we use a likelihood ratio test (LRT) here, rather than an F-test. The F-test would be OK in this particular case, with Gaussian error structure and fully balanced, nested design. However, LRTs are more general, and thus for consistency I will tend to use these (or AIC) throughout where required.

We can derive confidence intervals using confint(), and also produce predictions from the model using predict().

The abrasion data in the faraway package contains information on an experiment where four materials were fed into a wear testing machine and the amount of wear recorded. Four samples could be processed at the same time and the position of these samples may be important, but we really care about assessing wear rates between different materials. Multiple runs were performed. You can load the package and data as follows:

library(faraway)

data(abrasion)?abrasion). Fit an appropriate linear mixed model to these data and assess whether there is any statistical evidence for differences in wear rates between the different materials.

4.3 Non-independent data: pseudoreplication, nested variance and derived variable analysis

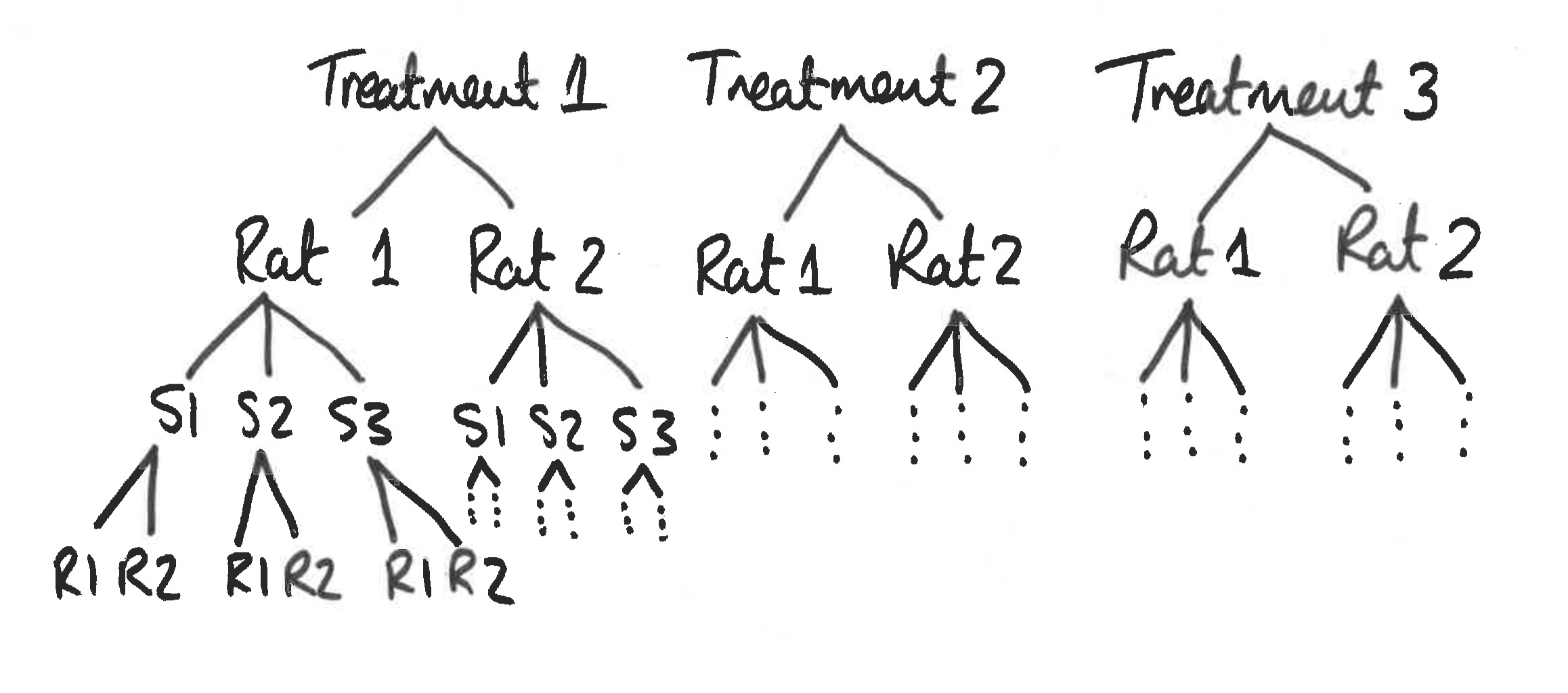

We take an example from Sokal & Rohlf (1981). The experiment involved a simple one-way anova with 3 treatments given to 6 rats. The analysis was complicated by the fact that three preparations were taken from the liver of each rat, and two readings of glycogen content were taken from each preparation. This generated 6 pseudoreplicates per rat to give a total of 36 readings in all. A schematic is given in Figure 4.2.

Figure 4.2: Schematic for glycogen in rats example

Clearly, it would be a mistake to analyse these data as if they were a straightforward one-way anova, because that would give us 33 degrees of freedom for error. In fact, since there are only two rats in each treatment, we have only one degree of freedom per treatment, giving a total of 3 d.f. for error.

The variance is likely to be different at each level of this nested analysis because:

- the readings differ because of variation in the glycogen detection method within each liver sample (measurement error);

- the pieces of liver may differ because of heterogeneity in the distribution of glycogen within the liver of a single rat;

- the rats will differ from one another in their glycogen levels because of sex, age, size, genotype, etc.;

- rats allocated different experimental treatments may differ as a result of the fixed effects of treatment.

If all we want to test is whether the experimental treatments have affected the glycogen levels, then we are not interested in liver bits within rat’s livers, or in preparations within liver bits. We could combine all the pseudoreplicates together, and analyse the 6 averages. This would have the virtue of showing what a tiny experiment this really was. This latter approach also ignores the nested sources of uncertainties. Instead we will use a linear mixed model.

The new concept here is that there are multiple random effects that are nested. These data are available in the “rats.rds” file. First, read in the data:

rats <- readRDS("rats.rds")

summary(rats)## Glycogen Treatment Rat Liver

## Min. :125.0 1:12 1:18 1:12

## 1st Qu.:135.8 2:12 2:18 2:12

## Median :141.0 3:12 3:12

## Mean :142.2

## 3rd Qu.:150.0

## Max. :162.04.3.1 The Wrong Analysis

Here is what not to do (try it anyway)!

rats_lm <- lm(Glycogen ~ Treatment * Rat * Liver, data = rats)The model has been specified as if it were a full factorial with no nesting and no pseudoreplication. Note that the structure of the data allows this mistake to be made. It is a very common problem with data frames that include pseudoreplication.

An ANOVA table gives:

anova(rats_lm, test = "F")## Analysis of Variance Table

##

## Response: Glycogen

## Df Sum Sq Mean Sq F value Pr(>F)

## Treatment 2 1557.56 778.78 36.7927 4.375e-07 ***

## Rat 1 413.44 413.44 19.5328 0.0003308 ***

## Liver 2 113.56 56.78 2.6824 0.0955848 .

## Treatment:Rat 2 384.22 192.11 9.0761 0.0018803 **

## Treatment:Liver 4 328.11 82.03 3.8753 0.0192714 *

## Rat:Liver 2 50.89 25.44 1.2021 0.3235761

## Treatment:Rat:Liver 4 101.44 25.36 1.1982 0.3455924

## Residuals 18 381.00 21.17

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Note: I can only use the

anova()function in this way because the data are balanced (or at least the model formulation above with no nesting and no pseudoreplication ends up treating the data as if they were balanced). In general you have to be very careful to useanova()in this way.

This says that there was a highly statistically significant difference between the treatment means, at least some of the rats were statistically significantly different from one another, and there was a statistically significant interaction effect between treatments and rats. This is wrong! The analysis is flawed because it is based on the assumption that there is only one error variance and that its value is 21.2. This value is actually the measurement error; that is to say the variation between one reading and another from the same piece of liver. For testing whether the treatment has had any effect, it is the rats that are the replicates, and there were only 6 of them in the whole experiment. Note also that the way the rats have been coded makes it look like there are only two rat “groups”. Again this is wrong (see mixed models section below).

One way that we could analyse these data is to use a derived variable analysis.

4.3.2 Derived variables avoid pseudoreplication

The idea is to get rid of the pseudoreplication by averaging over the liver bits and preparations for each rat. A useful way of averaging, in R, is to use aggregate (or tidyverse):

ratsNew <- rats %>%

group_by(Rat, Treatment) %>%

summarise(Glycogen = mean(Glycogen))

ratsNew## # A tibble: 6 x 3

## # Groups: Rat [?]

## Rat Treatment Glycogen

## <fct> <fct> <dbl>

## 1 1 1 132.

## 2 1 2 150.

## 3 1 3 134.

## 4 2 1 148.

## 5 2 2 152.

## 6 2 3 136ratsNew <- aggregate(Glycogen ~ Rat +

Treatment, data = rats, mean)

ratsNew## Rat Treatment Glycogen

## 1 1 1 132.5000

## 2 2 1 148.5000

## 3 1 2 149.6667

## 4 2 2 152.3333

## 5 1 3 134.3333

## 6 2 3 136.0000Here, ratsNew is a new dataset (hopefully shorter than its ancestral dataset) but has the same column headings as rats. (Hence be careful to specify the correct data set using the data argument where necessary.)

ratsNew_lm <- lm(Glycogen ~ Treatment, data = ratsNew)

drop1(ratsNew_lm, test = "F")## Single term deletions

##

## Model:

## Glycogen ~ Treatment

## Df Sum of Sq RSS AIC F value Pr(>F)

## <none> 132.94 24.589

## Treatment 2 259.59 392.54 27.085 2.929 0.1971This is a statistically valid analysis, but it ignores the uncertainties around the pseudo-replicates, and the interpretation of the response variable is actually the mean of a bunch of measurements, not the measurements themselves. In balanced designs this is OK, but it may not be possible to generate derived variables for some studies (how do you average a categorical response for example)?

4.3.3 Mixed model approach

4.3.3.1 Nested and crossed random effects

The bacterial loads example we saw earlier is an example of a crossed random effect i.e. the cabinet level 1 corresponds to the same cabinet for each of the media levels. In the rats data set this is no longer so, and we have to be careful to get the model formula correct. This section is subtle but important!

Notice that in the rats data the Rat column is coded as a 1 or a 2. This means that we could use the formula:

rats_lmer <- lmer(Glycogen ~ Treatment + (1 | Rat), data = rats)without throwing an error.

In this case we must tell lmer() that Liver is nested within Rat, and Rat within Treatment. We can do this in various ways, but the easiest thing here is to generate a unique coding for each of the 6 rats.

rats <- rats %>%

unite(Rat, Treatment, Rat,

sep = "_", remove = F) %>%

mutate(Rat = factor(

as.numeric(factor(Rat))))rats$Rat <- paste(rats$Treatment,

"_", rats$Rat)

rats$Rat <- factor(

as.numeric(factor(rats$Rat)))We can use nested random effects to account for the hierarchy of measurements:

rats_lmer <- lmer(Glycogen ~ Treatment + (1 | Rat / Liver), data = rats)

drop1(update(rats_lmer, REML = F), test = "Chisq")## Single term deletions

##

## Model:

## Glycogen ~ Treatment + (1 | Rat/Liver)

## Df AIC LRT Pr(Chi)

## <none> 245.27

## Treatment 2 247.77 6.4962 0.03885 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Note: the syntax

Rats / Livermeans that theLiverlevels are nested within theRatlevels. In this case we do not have to recodeLiverto be unique, since the nesting ensures thatlmer()knows thatLiver = 1insideRat = 1is different fromLiver = 1insideRat = 2.

The data for the alcohol example in the slides can be found in the “drunk.rds” file. Read this data set into R and:

- Conduct a derived variable analysis, to test for the impact of

fresheneruse on the model fit using a likelihood ratio test. - Produce 97% confidence intervals for the

freshenereffect. - Fit a mixed model using

lmer(), taking care to get the nesting of the random effects correct. - Use a likelihood ratio test to assess the impact of

fresheneron the model fit. Does it matter whether you use REML or ML here, and why? - Produce an estimate of the

freshenereffect with 97% profile confidence intervals. - How do these estimates compare?

4.4 Non-independent data: Split-plot analyses

Split-plot experiments are like nested designs in that they involve plots of different sizes and hence have multiple error terms (one error term for each plot size). They are also like nested designs in that they involve pseudoreplication: measurements made on the smaller plots are pseudoreplicates as far as the treatments applied to larger plots are concerned. This is spatial pseudoreplication, and arises because the smaller plots nested within the larger plots are not spatially independent of one another. The only real difference between nested analysis and split plot analysis is that other than blocks, all of the factors in a split-plot experiment are typically fixed effects, whereas in most nested analyses most (or all) of the factors are random effects.

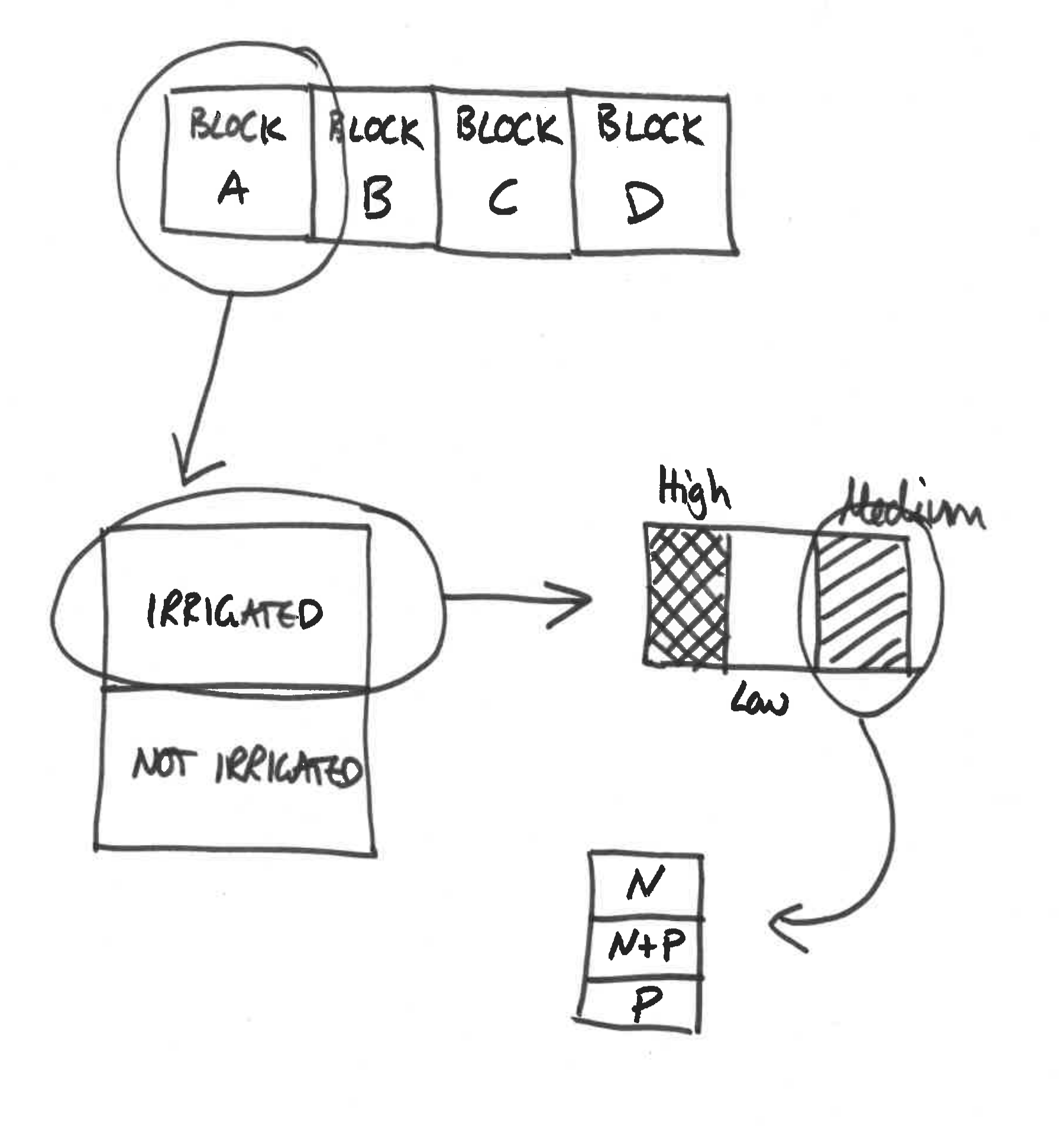

This experiment involves the yield of cereals in a factorial experiment with 3 treatments, each applied to plots of different sizes within 4 blocks. The largest plots (half of each block) were irrigated or not because of the practical difficulties of watering large numbers of small plots. Next, the irrigated plots were split into 3 smaller split-plots and seeds were sown at different densities. Again, because the seeds were machine sown, larger plots were preferred. Finally, each sowing density plot was split into 3 small split-split plots and fertilisers applied by hand (N alone, P alone and N + P together). A schematic is given in Figure 4.3.

Figure 4.3: Schematic for split plot example

The data look like:

| Control | Irrigated | ||||||

|---|---|---|---|---|---|---|---|

| Density / Fertiliser | N | NP | P | N | NP | P | |

| high | 81 | 93 | 92 | 78 | 122 | 98 | |

| Block A | medium | 92 | 92 | 89 | 121 | 119 | 110 |

| low | 90 | 107 | 95 | 80 | 100 | 87 | |

| high | 74 | 74 | 81 | 136 | 132 | 133 | |

| Block B | medium | 98 | 106 | 98 | 99 | 123 | 94 |

| low | 83 | 95 | 80 | 102 | 105 | 109 | |

| high | 82 | 94 | 78 | 119 | 136 | 122 | |

| Block C | medium | 112 | 91 | 104 | 90 | 113 | 118 |

| low | 85 | 88 | 88 | 60 | 114 | 104 | |

| high | 85 | 83 | 89 | 116 | 133 | 136 | |

| Block D | medium | 79 | 87 | 86 | 109 | 126 | 131 |

| low | 86 | 89 | 78 | 73 | 114 | 114 |

These data are available in the “splityield.rds” file. First, let’s read in the data and take a look at it.

splityield <- readRDS("splityield.rds")

head(splityield)

summary(splityield)## yield block irrigation density fertilizer

## 1 90 A control low N

## 2 95 A control low P

## 3 107 A control low NP

## 4 92 A control medium N

## 5 89 A control medium P

## 6 92 A control medium NP## yield block irrigation density fertilizer

## Min. : 60.00 A:18 control :36 high :24 N :24

## 1st Qu.: 86.00 B:18 irrigated:36 medium:24 NP:24

## Median : 95.00 C:18 low :24 P :24

## Mean : 99.72 D:18

## 3rd Qu.:114.00

## Max. :136.004.4.1 Analysing split-plot designs using lmer

Now, how do we set up a mixed effects model to analyse these data? block is the only random effect but our data are nested. Our fixed effects are irrigation, density and fertilizer. Here is the model, including prediction of the variance due to block’s random effect on the intercept of the model.

Note: Ordinarily we would write the nested random effect terms using the syntax:

split_lmer <- lmer(yield ~ irrigation * density * fertilizer +

(1 | block / irrigation / density / fertilizer), data = splityield)## Error: number of levels of each grouping factor must be < number of observationsHowever, if you run this, you will notice that the function returns an error. This is because there are no replicates within the final nesting (i.e. the table above on has a single replicate in each cell). To overcome this we need to remove the final nesting. Hence we can run:

split_lmer <- lmer(yield ~ irrigation * density * fertilizer +

(1 | block / irrigation / density), data = splityield)Aside: Another way of writing

(1 | block / irrigation / density / fertilizer)is:

(1 | block) + (1 | block:irrigation) + (1 | block:irrigation:density) + (1 | block:irrigation:density:fertilizer).Pretty grim eh? However, in this case we note that the final nested random effect only has one replicate per group, so we could simply remove this term. Leaving:

(1 | block) + (1 | block:irrigation) + (1 | block:irrigation:density).This is equivalent to

(1 | block / irrigation / density)

We can now perform model simplification (remembering to refit the model using ML in each case):

drop1(update(split_lmer, REML = F), test = "Chisq")## Single term deletions

##

## Model:

## yield ~ irrigation * density * fertilizer + (1 | block/irrigation/density)

## Df AIC LRT Pr(Chi)

## <none> 573.51

## irrigation:density:fertilizer 4 569.00 3.4938 0.4788We can see that the three-way interaction term is not statistically significant, so we can drop it from the model and then test dropping the two-way interaction effects.

split_lmer <- update(split_lmer, ~ . - irrigation:density:fertilizer)

drop1(update(split_lmer, REML = F), test = "Chisq")## Single term deletions

##

## Model:

## yield ~ irrigation + density + fertilizer + (1 | block/irrigation/density) +

## irrigation:density + irrigation:fertilizer + density:fertilizer

## Df AIC LRT Pr(Chi)

## <none> 569.00

## irrigation:density 2 576.71 11.7088 0.002867 **

## irrigation:fertilizer 2 577.05 12.0424 0.002427 **

## density:fertilizer 4 565.19 4.1888 0.381061

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1We are thus safe to remove the density:fertilizer interaction term:

split_lmer <- update(split_lmer, ~ . - density:fertilizer)drop1(update(split_lmer, REML = F), test = "Chisq")## Single term deletions

##

## Model:

## yield ~ irrigation + density + fertilizer + (1 | block/irrigation/density) +

## irrigation:density + irrigation:fertilizer

## Df AIC LRT Pr(Chi)

## <none> 565.19

## irrigation:density 2 572.90 11.709 0.002867 **

## irrigation:fertilizer 2 572.34 11.144 0.003803 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1We thus have a simplified final model. If you really want to know all the coefficients, type summary(split.lmer), but here we will try to understand the interaction terms graphically.

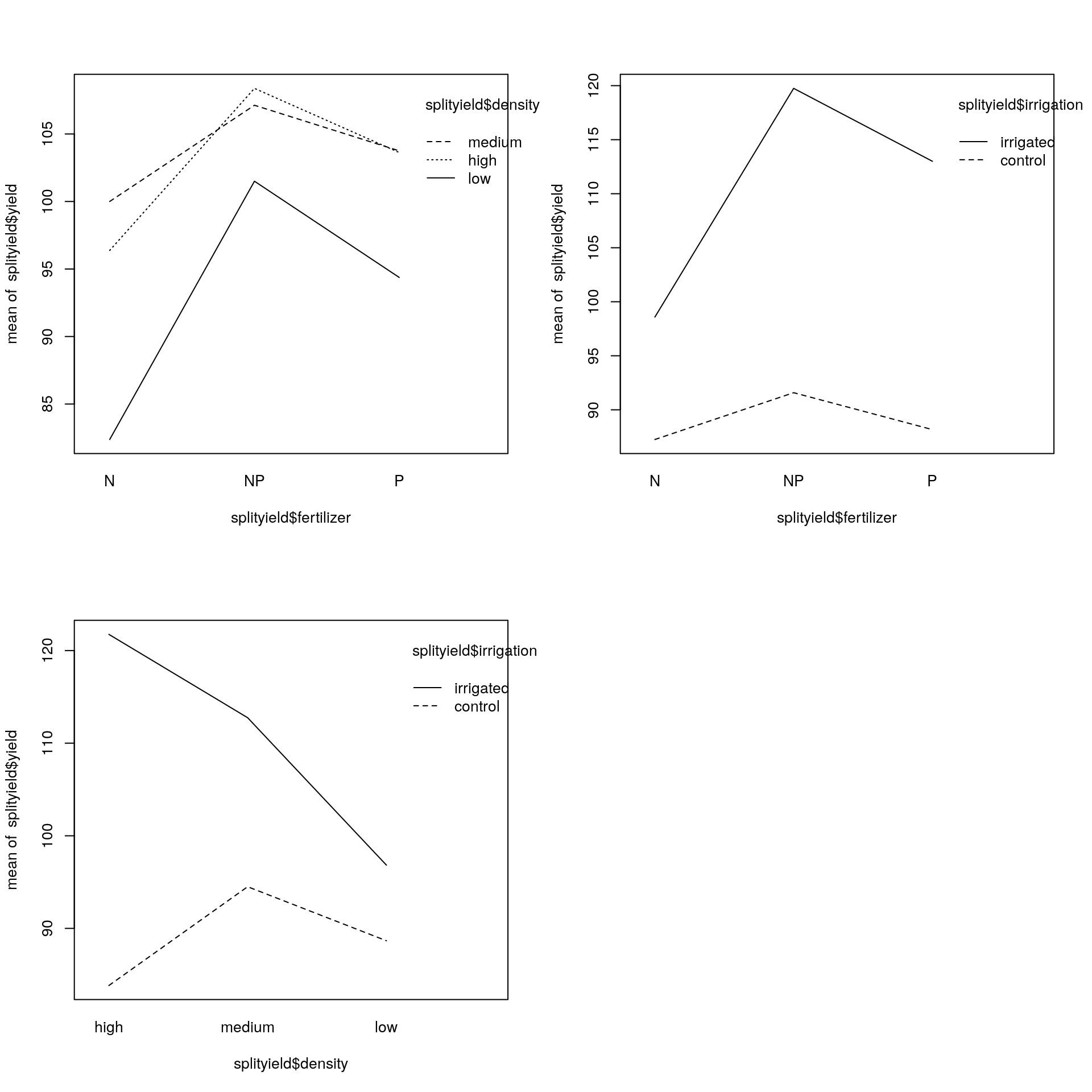

A useful way to understand these is to use the interaction.plot() function. The variables are listed in a non-obvious order: first the factor to go on the \(x\)-axis, then the factor to go as different lines on the plot, then the response variable.

There are 3 plots to look at so we make a \(2 \times 2\) plotting area:

par(mfrow = c(2, 2))

interaction.plot(splityield$fertilizer, splityield$density, splityield$yield)

interaction.plot(splityield$fertilizer, splityield$irrigation, splityield$yield)

interaction.plot(splityield$density, splityield$irrigation, splityield$yield)

par(mfrow = c(1, 1))

The really pronounced interaction is that between irrigation and density, with a reversal of the high to low density difference on the irrigated and control plots. Overall, the 3-way interaction was not statistically significant, nor was the 2-way interaction between density and fertilizer. The 2-way interaction between irrigation and fertilizer, however, was highly statistically significant.

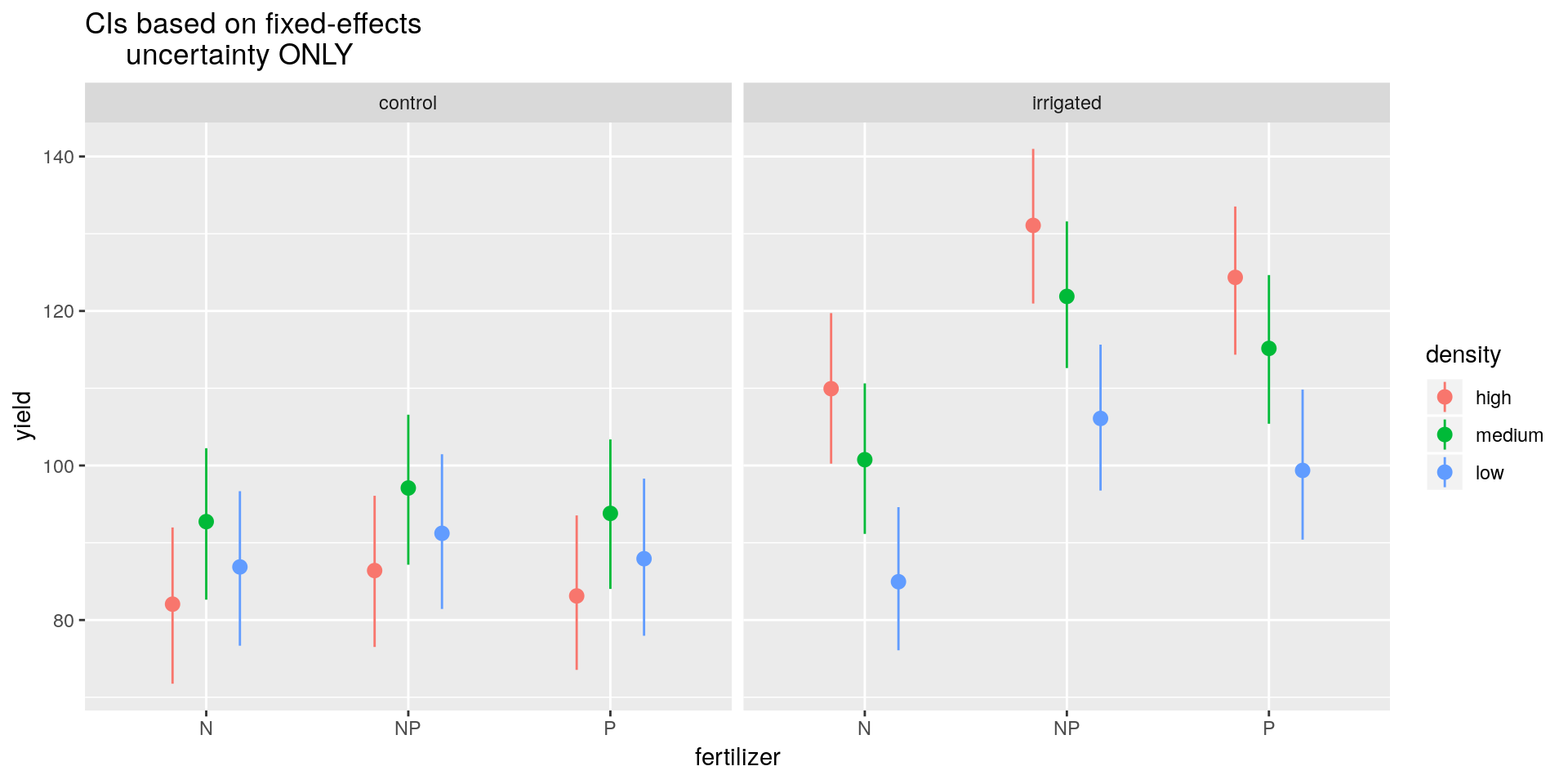

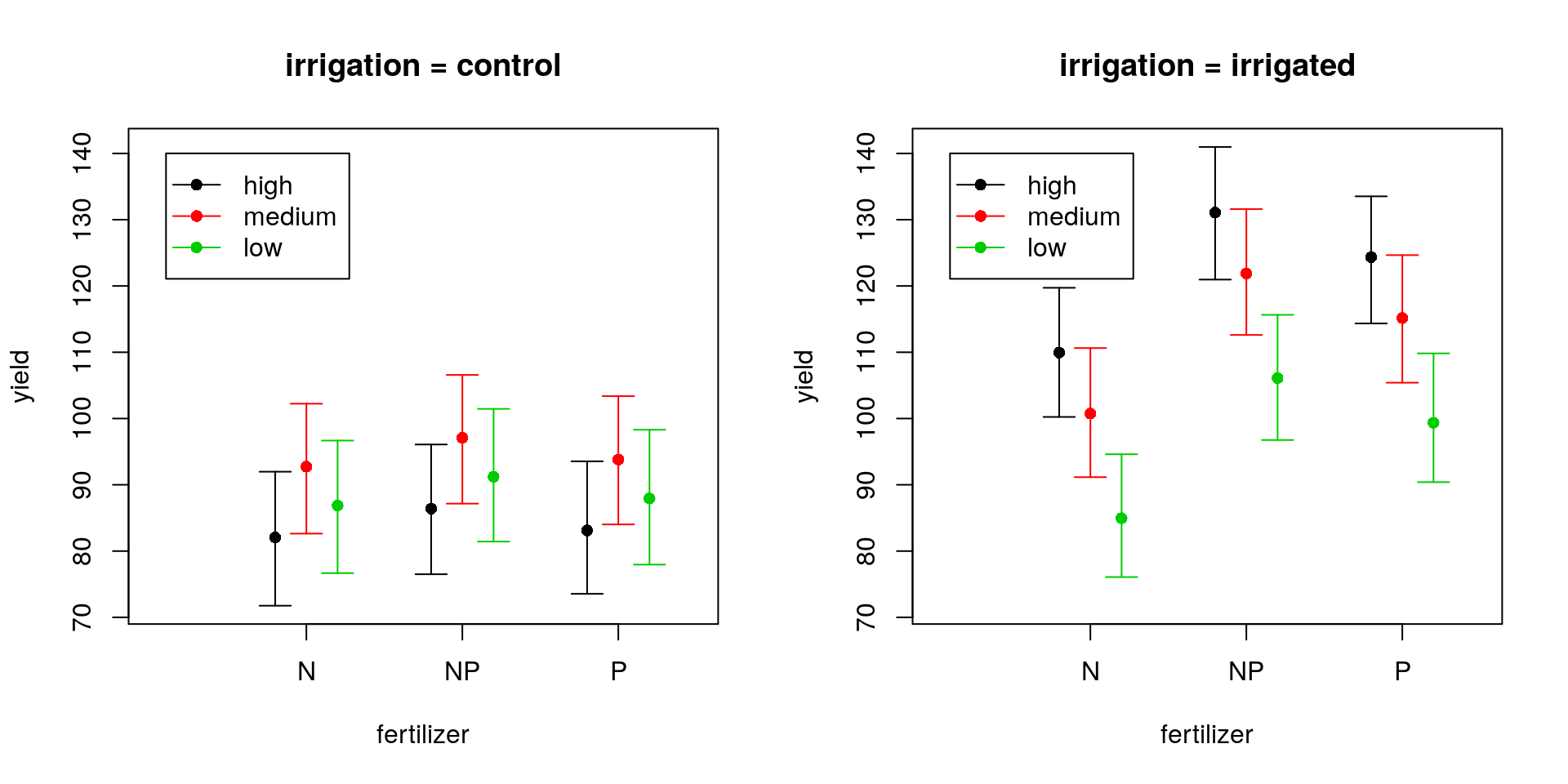

Another way to visualise the model outputs would be to generate a plot of the predicted yield (plus confidence intervals), for each combination of explanatory factors. I have created a data.frame with these predictions using bootstrapping. The code to replicate this can be found here, but is a bit time consuming to run, so I have included the predictions as a file called “split_pred.rds”.

First, read in the predictions:

split_pred <- readRDS("split_pred.rds")Now to plot these predictions (note, once again these ease of ggplot2 compared to base R graphics):

In ggplot2 we can use the geom_pointrange() function, a colour aesthetic and a facet_wrap().

To plot the CIs in base R, we can proceed as follows:

- Set the figure layout to have two figures side-by-side.

- Calculate limits for the \(y\)-axis based on the range of the CIs to be plotted.

- Calculate manual limits for the \(x\)-axis to enable us to jitter the error bars around each fertiliser level.

- For each level of

irrigation:- Extract the subset of data corresponding to the level of

irrigation. - Produce an empty plot (setting the \(y\)-limits and \(x\)-limits). Remove the \(x\)-axis (using the

xaxt = "n"argument). This latter step is so that we can add thefertilizerlabels manually in the next step. - For each level of

density:- Extract the subset of data corresponding to the level of

density. - Sort this so that the levels of

fertilizerare the same at each stage of theiloop11. - Add points for the estimates and add confidence intervals using the

arrows()function12. Notice that I have used aforloop to allow me to plot the three CIs for each level offertilizer. All that this loop does is to run thearrows()command 3 times, substitutingjeach time for the corresponding row of the sorted data set (which will be of length three here). You could do this explicitly in three lines of code if you prefer. Notice that I’ve jittered the points slightly so that the three levels ofdensitycan be plotted side-by-side.

- Extract the subset of data corresponding to the level of

- Add a new \(x\)-axis and set appropriate labels for the points.

- Add a legend.

- Extract the subset of data corresponding to the level of

Phew! (Now check out the tidyverse solution and tell me it’s not worth the effort to learn ggplot2!)

## plot confidence intervals

ggplot(newdata,

aes(x = fertilizer, colour = density)) +

geom_pointrange(

aes(y = yield, ymin = lci, ymax = uci),

position = position_dodge(width = 0.5)) +

facet_wrap(~ irrigation) +

labs(title = "CIs based on fixed-effects

uncertainty ONLY")

## set plot layout

par(mfrow = c(1, 2))

## set plot limits

ylims <- min(newdata[, -c(1:3)])

ylims <- c(ylims, max(newdata[, -c(1:3)]))

xlims <- c(0, 3.5)

for(m in levels(newdata$irrigation)) {

## extract control predictions

temp <- newdata[newdata$irrigation == m, ]

## produce empty plot

plot(NULL, ylim = ylims, xlim = xlims,

xaxt = "n", main = paste("irrigation =", m),

xlab = "fertilizer", ylab = "yield")

## plot yield against fertiliser stratified by density

for(i in 1:length(levels(newdata$density))) {

temp1 <- temp[

temp$density == levels(newdata$density)[i], ]

temp1 <- temp1[order(temp1$fertilizer), ]

for(j in 1:nrow(temp1)){

points(j - 0.2 * (2 - i), temp1$yield[j],

col = i, pch = 16)

arrows(j - 0.2 * (2 - i), temp1$lci[j],

j - 0.2 * (2 - i), temp1$uci[j],

length = 0.1, col = i,

angle = 90, code = 3)

}

}

## add custom x-axis

axis(side = 1, at = 1:3,

labels = levels(newdata$fertilizer))

## add legend

legend(0.1,

diff(par("usr")[3:4]) * 0.95 + par("usr")[3],

legend = levels(newdata$density),

col = 1:3, lty = 1, pch = 16)

}

## reset plot layout

par(mfrow = c(1, 1))So we can see that in the control group the medium density sowing seems to produce slightly higher yields on average than the low or high density settings, but we note that these differences are not statistically significantly different at the 5% level. However, irrigation tends to not only produce higher yields on average (except for the N fertiliser), but also to favour high density sowing efforts, which are now much preferred over low density sowing efforts (although not too dissimilar from medium density efforts). The model tends to favour the NP fertiliser in all cases, though there’s not much between NP and P.

Note: This approach does now take into account the random effects variances or covariances. It is therefore likely to underestimate the variance. To account for these additional uncertainties is difficult. If you want a more consistent approach, go Bayesian.

4.5 Non-independent data: Absorbing the influence of random effects

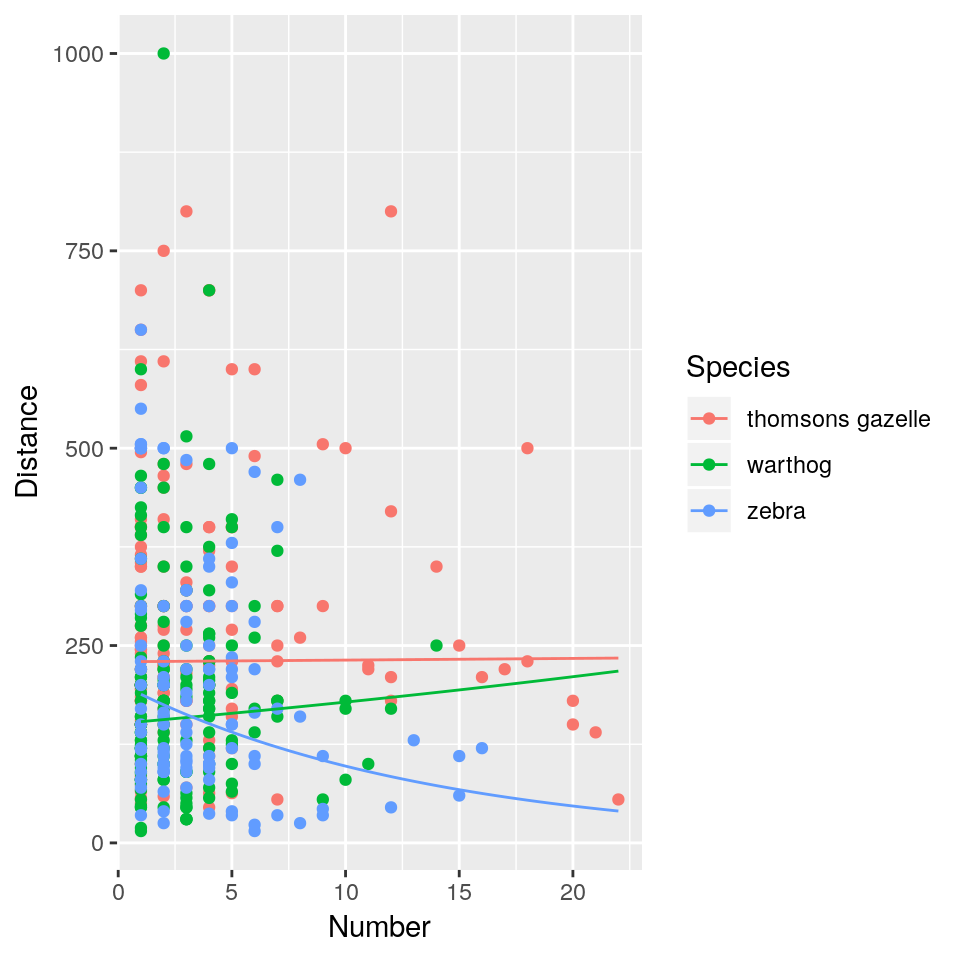

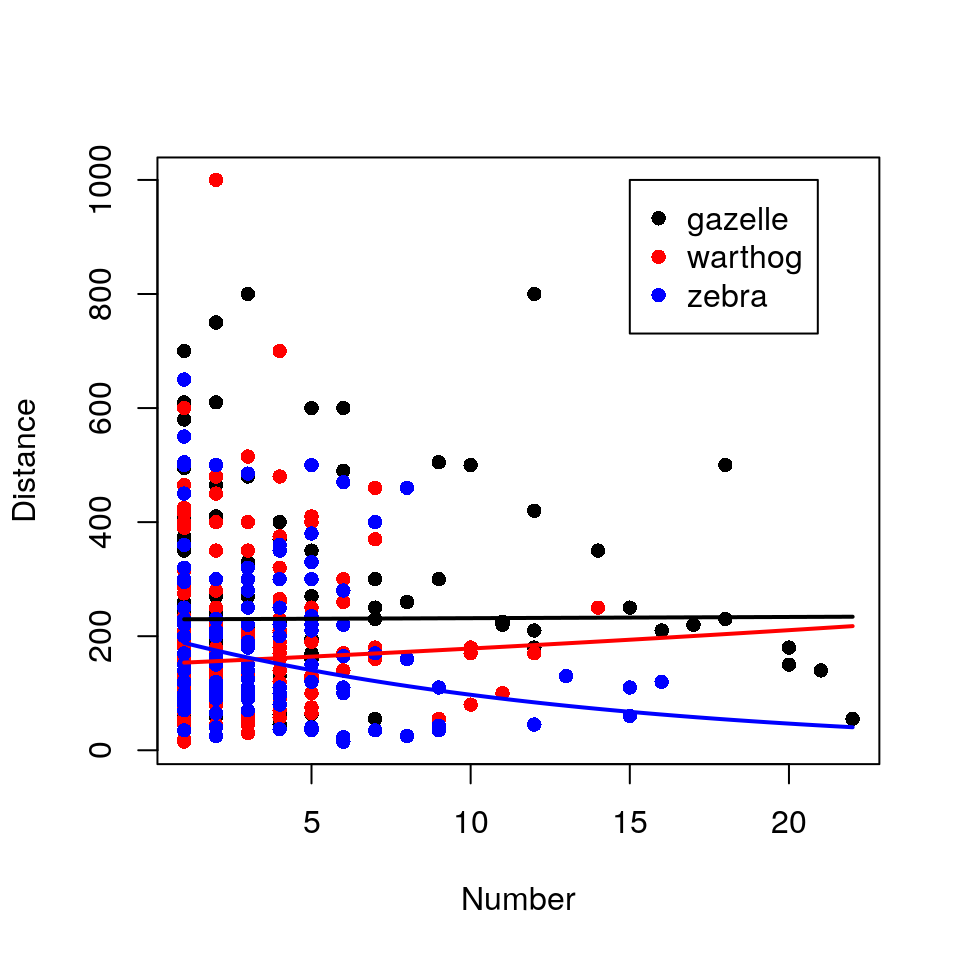

Eight groups of students walked through Hell’s Gate National Park and estimated the distance to groups of individuals of several species of mammalian herbivores. Here we work with data on distances to three key species: Thomson’s gazelle, warthog and zebra. We are interested in whether distances to these animals differ among species, whether distances depend on animal group size, and also whether the relationship between distance and group size differs among species. These data are available in the “hg.rds” file.

A naïve analysis would ignore the identity of the student group, and therefore treat all the distance observations as independent. It would probably fit a lm of distance against species, number and the interaction between species and number. My own exploration of the data suggests that raw distances are skewed, and that a log-transformation improves homoscedasticity and normality of residuals. So before analysing we do this transformation.

hg <- readRDS("hg.rds")hg$ldist <- log(hg$Distance)

hg_lm <- lm(ldist ~ Species * Number, data = hg)

drop1(hg_lm, test = "F")## Single term deletions

##

## Model:

## ldist ~ Species * Number

## Df Sum of Sq RSS AIC F value Pr(>F)

## <none> 212.51 -346.77

## Species:Number 2 5.3917 217.91 -339.19 5.7846 0.003305 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This suggests a statistically significant interaction between Species and Number (\(F_{2, 456} = 5.78\), \(p = 0.003\)).

summary(hg_lm)##

## Call:

## lm(formula = ldist ~ Species * Number, data = hg)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.32646 -0.39255 0.04353 0.44779 1.85666

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.4357462 0.0689700 78.813 < 2e-16 ***

## Specieswarthog -0.4178168 0.1066872 -3.916 0.000104 ***

## Specieszebra -0.1247300 0.1203792 -1.036 0.300685

## Number 0.0009105 0.0118508 0.077 0.938790

## Specieswarthog:Number 0.0156732 0.0254035 0.617 0.537563

## Specieszebra:Number -0.0742589 0.0238692 -3.111 0.001981 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.6827 on 456 degrees of freedom

## Multiple R-squared: 0.09402, Adjusted R-squared: 0.08409

## F-statistic: 9.465 on 5 and 456 DF, p-value: 1.338e-08The interaction appears to be driven by a decrease in distance with increasing group size, but only in zebras. We can draw the relevant figure (notice that this is where tidyverse comes into its own, with much more concise code resulting in a better plot).

Generate ‘fake’ data in order to plot fitted lines:

newdata <- expand.grid(

Number = seq(min(hg$Number),

max(hg$Number), length = 100),

Species = levels(hg$Species)

)Now get the predicted values for the fake data, and back-transform log-distance to real distances, using the exp command:

newdata <- newdata %>%

mutate(Distance =

exp(predict(hg_lm, newdata)))Now plot the fitted lines against the observed data points:

ggplot(hg, aes(x = Number, y = Distance,

col = Species)) +

geom_point() +

geom_line(data = newdata)

Generate ‘fake’ data in order to plot fitted lines:

newdata <- expand.grid(

Number = seq(min(hg$Number), max(hg$Number),

length = 100),

Species = levels(hg$Species)

)Now get the predicted values for the fake data, and back-transform log-distance to real distances, using the exp command:

newdata$Distance <- exp(predict(hg_lm, newdata))Now plot the fitted lines against the observed data points:

plot(Distance ~ Number, data = hg, type = "n")

points(Distance ~ Number, data = hg,

subset = (Species == "thomsons gazelle"),

pch = 16)

points(Distance ~ Number, data = hg,

subset = (Species == "warthog"),

pch = 16,

col = "red")

points(Distance ~ Number, data = hg,

subset = (Species == "zebra"),

pch = 16,

col = "blue")

lines(Distance ~ Number, data = newdata,

subset = (newdata$Species == "thomsons gazelle"),

lwd = 2)

lines(Distance ~ Number, data = newdata,

subset = (newdata$Species == "warthog"),

lwd = 2,

col = "red")

lines(Distance ~ Number, data = newdata,

subset = (newdata$Species == "zebra"),

lwd = 2,

col = "blue")

legend(15, 1000,

pch = rep(16, 3),

col = c("black", "red", "blue"),

legend = c("gazelle", "warthog", "zebra"))

This naïve analysis is fundamentally flawed because the distance estimations are not independent of each other. Several estimates come from each of 8 student groups, and it’s easy to imagine (or remember) that observers vary dramatically in the bias of their distance estimates (and indeed in their estimates of group size, possibly even species identity). It’s entirely possible that a group of observers who estimate “short” distances also encountered strangely large groups of zebras: such a correlation between Group.Name and Distance could drive the observed relationship for zebras.

One solution would be to fit Group.Name as a categorical explanatory variable to our model. This approach has two main problems:

- It wastes valuable degrees of freedom (8 student groups, therefore 7 d.f. as a main effect and 7 d.f. more for each interaction), giving the residual variance fewer d.f. and therefore weakening the power of our analysis.

- It could easily result in a complicated minimal adequate model involving something that we don’t really care about and which does not appear in our hypothesis:

Group.Name.

Instead we would like to be able to absorb the variance in distance estimates among student groups. Such a model would estimate the relationship between Distance, Species and Number for an average group of observers. It could also tell us just how much variance exists among groups of observers.

This is a classic example of where a mixed-effects model is useful. We care about the influence of Species and Number on Distance. These are fixed effects. We know that Group.Name will have an influence on Distance, but the categories of Group.Name are uninformative to the lay reader and cannot be replicated in future field trips. Hence we treat Group.Name as a random effect. An ideal mixed-effects model will test the influence of Species and Number on Distance, meanwhile absorbing the influence of Group.Name.

hg_lmer <- lmer(ldist ~ Species * Number + (1 | Group.Name), data = hg)Note that there is no nesting going on here; Species and Number have the same interpretation regardless of the Group.Name. Let’s take a look at the summary of the fitted model.

summary(hg_lmer)## Linear mixed model fit by REML ['lmerMod']

## Formula: ldist ~ Species * Number + (1 | Group.Name)

## Data: hg

##

## REML criterion at convergence: 814.2

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -4.4891 -0.6386 -0.0128 0.5896 2.6806

##

## Random effects:

## Groups Name Variance Std.Dev.

## Group.Name (Intercept) 0.2085 0.4566

## Residual 0.3046 0.5519

## Number of obs: 462, groups: Group.Name, 8

##

## Fixed effects:

## Estimate Std. Error t value

## (Intercept) 5.4411458 0.1721299 31.611

## Specieswarthog -0.3947870 0.0869880 -4.538

## Specieszebra -0.2975063 0.0997439 -2.983

## Number -0.0001202 0.0099875 -0.012

## Specieswarthog:Number -0.0049066 0.0208474 -0.235

## Specieszebra:Number -0.0289725 0.0199376 -1.453

##

## Correlation of Fixed Effects:

## (Intr) Spcswr Spcszb Number Spcsw:N

## Speciswrthg -0.216

## Specieszebr -0.173 0.362

## Number -0.235 0.415 0.318

## Spcswrthg:N 0.101 -0.710 -0.148 -0.460

## Spcszbr:Nmb 0.084 -0.196 -0.747 -0.416 0.200This summary table is packed with information. First, we can see that the standard deviation in Distance among student groups is 0.46, which is almost as big as the residual standard deviation of 0.55. This suggests variation among observers is important and could dramatically change our results. Second, the estimates of slopes and differences between species are going in the ‘same direction’ as the estimates from the naïve lm() analysis, but seem to be smaller.

With unbalanced experimental designs like this, the fixed effects part of the model cannot be simplified in the usual way. This is because the removal of a fixed effect will fundamentally change the model fit: it’s not just a case of dumping the deviance explained by a fixed effect into the residual deviance, because the residual deviance is different for each nested level of the experimental design. Furthermore, if the experiment is unbalanced, we should beware of using F-tests to check the statistical significance of terms. Instead, we should use AIC or likelihood ratio tests, and if we do this we need to change the fitting mechanism from REML to ML whilst performing LRT. (We do this in situ using the update() function.)

drop1(update(hg_lmer, REML = F), test = "Chisq")## Single term deletions

##

## Model:

## ldist ~ Species * Number + (1 | Group.Name)

## Df AIC LRT Pr(Chi)

## <none> 800.99

## Species:Number 2 799.15 2.1605 0.3395As usual, likelihood ratio tests in this context have a chi-squared distribution with d.f. equal to the difference in d.f. between the models. Here we have 8 - 6 = 2 d.f. So, here we find no evidence for an interaction between Species and Number (\(X^2_2 = 2.16\), \(p = 0.34\)).

Since we have an unbalanced design here, we can continue model simplification by dropping each of the main effect terms from the current model and seeing which has the largest impact on model fit (in this case the model simplification will be different depending on the order in which variables are added/removed from the model).

## set baseline model from previous round

hg_lmer <- update(hg_lmer, ~ . - Species:Number)

## now drop terms compare to baseline model

drop1(update(hg_lmer, REML = F), test = "Chisq")## Single term deletions

##

## Model:

## ldist ~ Species + Number + (1 | Group.Name)

## Df AIC LRT Pr(Chi)

## <none> 799.15

## Species 2 849.64 54.490 1.471e-12 ***

## Number 1 797.68 0.528 0.4674

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Here dropping Number has little impact on the model fit, whereas dropping Species results in a statistically significantly inferior fit. Hence the next stage is to drop Number. Therefore our baseline model now has just the main effect for Species. The final stage is to drop Species and assess the change in fit relative to our current model.

Species before?

## set baseline model from previous round

hg_lmer <- update(hg_lmer, ~ . - Number)

## now drop terms compare to baseline model

drop1(update(hg_lmer, REML = F), test = "Chisq")## Single term deletions

##

## Model:

## ldist ~ Species + (1 | Group.Name)

## Df AIC LRT Pr(Chi)

## <none> 797.68

## Species 2 847.64 53.965 1.913e-12 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This suggests there is a clear effect of Species identity on log(Distance) (\(X^2_2 = 54.0\), \(p < 0.001\)).

Now we’d like to see our model summary (notice that R has automatically kept the REML fit; since we only converted the model objects to use ML during the model comparison exercise):

summary(hg_lmer)## Linear mixed model fit by REML ['lmerMod']

## Formula: ldist ~ Species + (1 | Group.Name)

## Data: hg

##

## REML criterion at convergence: 797.1

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -4.4743 -0.6479 -0.0538 0.5904 2.6838

##

## Random effects:

## Groups Name Variance Std.Dev.

## Group.Name (Intercept) 0.2161 0.4649

## Residual 0.3042 0.5516

## Number of obs: 462, groups: Group.Name, 8

##

## Fixed effects:

## Estimate Std. Error t value

## (Intercept) 5.43619 0.17008 31.962

## Specieswarthog -0.41088 0.06060 -6.780

## Specieszebra -0.40508 0.06627 -6.113

##

## Correlation of Fixed Effects:

## (Intr) Spcswr

## Speciswrthg -0.179

## Specieszebr -0.164 0.466This final summary tells us that the standard deviation among observer groups is almost as big as the residual standard deviation in distances. It tells us that both warthogs and zebra are closer to the observer than Thomson’s gazelles, which are approximately \(e^{5.44} = 230\) metres away on average. The number of animals in a group has negligible influence on distance given the uncertainties in the data.

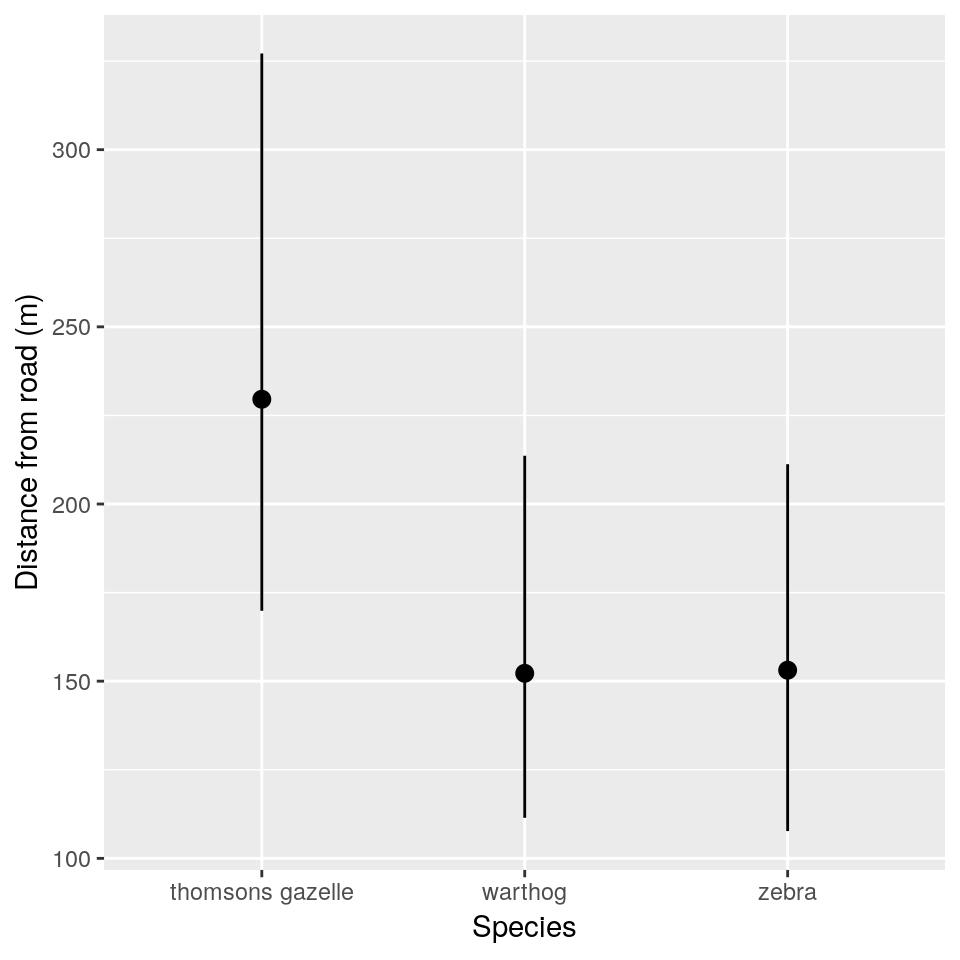

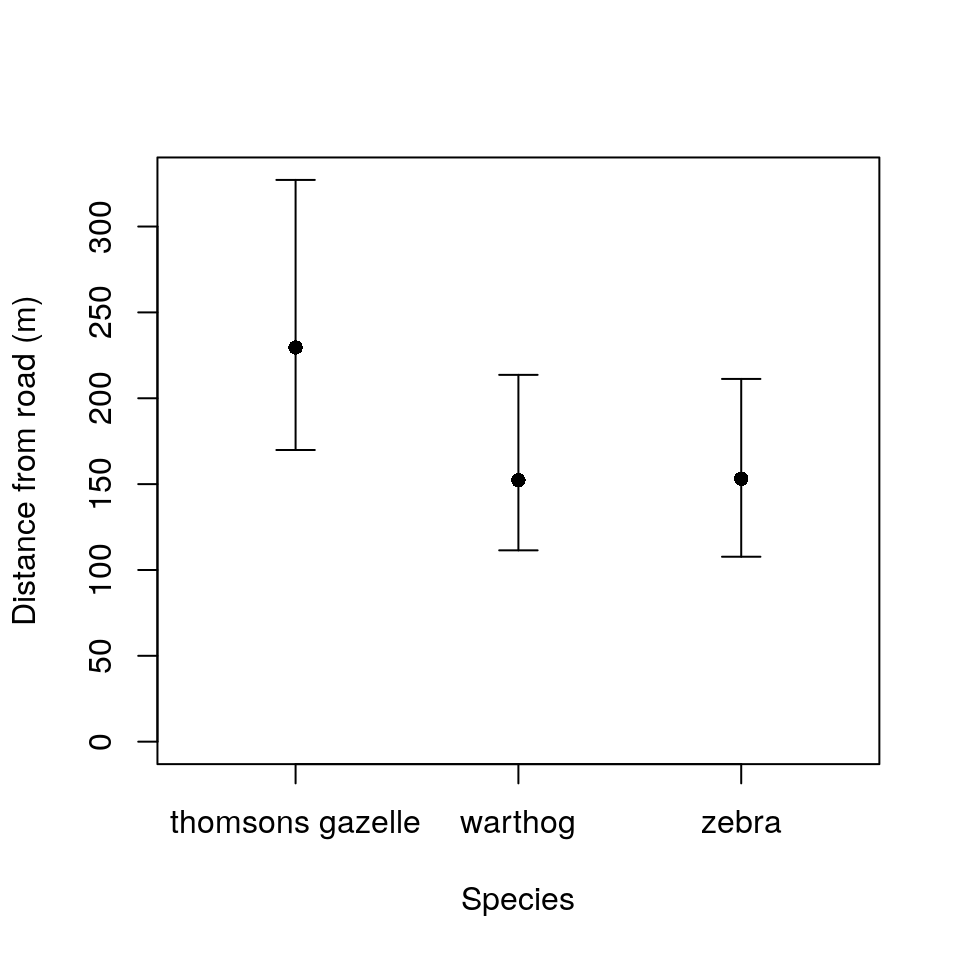

We’re now left with a final model that has a single categorical variable. We could provide a boxplot of the raw data to describe it, but in this instance it would be misleading because it would not differentiate between observer-level noise and residual noise. In this instance we will produce confidence intervals for the mean distance from the road for each species and plot them.

The simplest way to generate confidence intervals for each of the three parameters of the model is to refit the model three times, changing the baseline species each time and using the confint function to extract the confidence intervals (converting to the correct scale at the end). For consistency we’ll use a bootstrap method to generate the intervals (using 500 replicates, which is the default, though you might want to change to a larger number in practice). (Note that you could use a predict() solution as in the earlier example, which is often easier for more complex predictions.)

hg_cis <- matrix(NA, length(levels(hg$Species)), 3)

hg_spec <- levels(hg$Species)

for(i in 1:length(hg_spec)){

hg$Species <- relevel(hg$Species, ref = levels(hg$Species)[i])

hg_lmer <- update(hg_lmer, data = hg)

hg_cis[i, ] <- c(

coef(summary(hg_lmer))[ , "Estimate"][1],

confint(hg_lmer, quiet = T, method = "boot")[3, ]

)

}

hg_cis <- exp(hg_cis)

hg_cis <- as.data.frame(hg_cis)

colnames(hg_cis) <- c("mean", "lci", "uci")

hg_cis$Species <- hg_spec

hg_cis## mean lci uci Species

## 1 229.5666 169.8597 327.1454 thomsons gazelle

## 2 152.2176 111.4347 213.6262 warthog

## 3 153.1030 107.7038 211.2413 zebraIn ggplot2 we can use the geom_pointrange() function.

ggplot(hg_cis, aes(x = Species)) +

geom_pointrange(

aes(y = mean, ymin = lci,

ymax = uci)) +

xlab("Species") +

ylab("Distance from road (m)")

To plot the CIs in base R, we can proceed as follows:

- Calculate limits for the \(y\)-axis based on the range of the CIs to be plotted.

- Calculate manual limits for the \(x\)-axis to enable the species names to render correctly.

- Produce a scatterplot of points defined on the ranges set above, but remove the \(x\)-axis (using the

xaxt = "n"argument). This latter step is so that we can add the species labels manually in the next step. - Add a new \(x\)-axis and set appropriate labels for the points.

- Add confidence intervals using the

arrows()function. Notice that Ive used aforloop to allow me to plot the three CIs in one line of code as in the earlier example. You could do this explicitly in three lines of code if you prefer.

ylims <- c(0, max(hg_cis[, -ncol(hg_cis)]))

xlims <- c(0.5, 3.5)

plot(1:nrow(hg_cis), hg_cis$mean,

xlab = "Species",

ylab = "Distance from road (m)",

xaxt = "n", pch = 16, ylim = ylims,

xlim = xlims)

axis(1, 1:nrow(hg_cis), hg_cis$Species)

for(i in 1:nrow(hg_cis)) {

arrows(i, hg_cis$lci[i],

i, hg_cis$uci[i],

code = 3,

angle = 90,

length = 0.1)

}

4.6 Model checking with mixed models

We need to be just as conscious of testing the assumptions of mixed effects models as we are with any other. The assumptions are:

- Within-group errors are independent and normally distributed with mean zero and variance \(\sigma^2\).

- Within-group errors are independent of the random effects.

- Random effects are normally distributed with mean zero.

- Random effects have a covariance matrix \(\Psi\) that does not depend on the group… this is rather advanced.

- Random effects are independent for different groups, except as specified by nesting… I’m not really sure what this means.

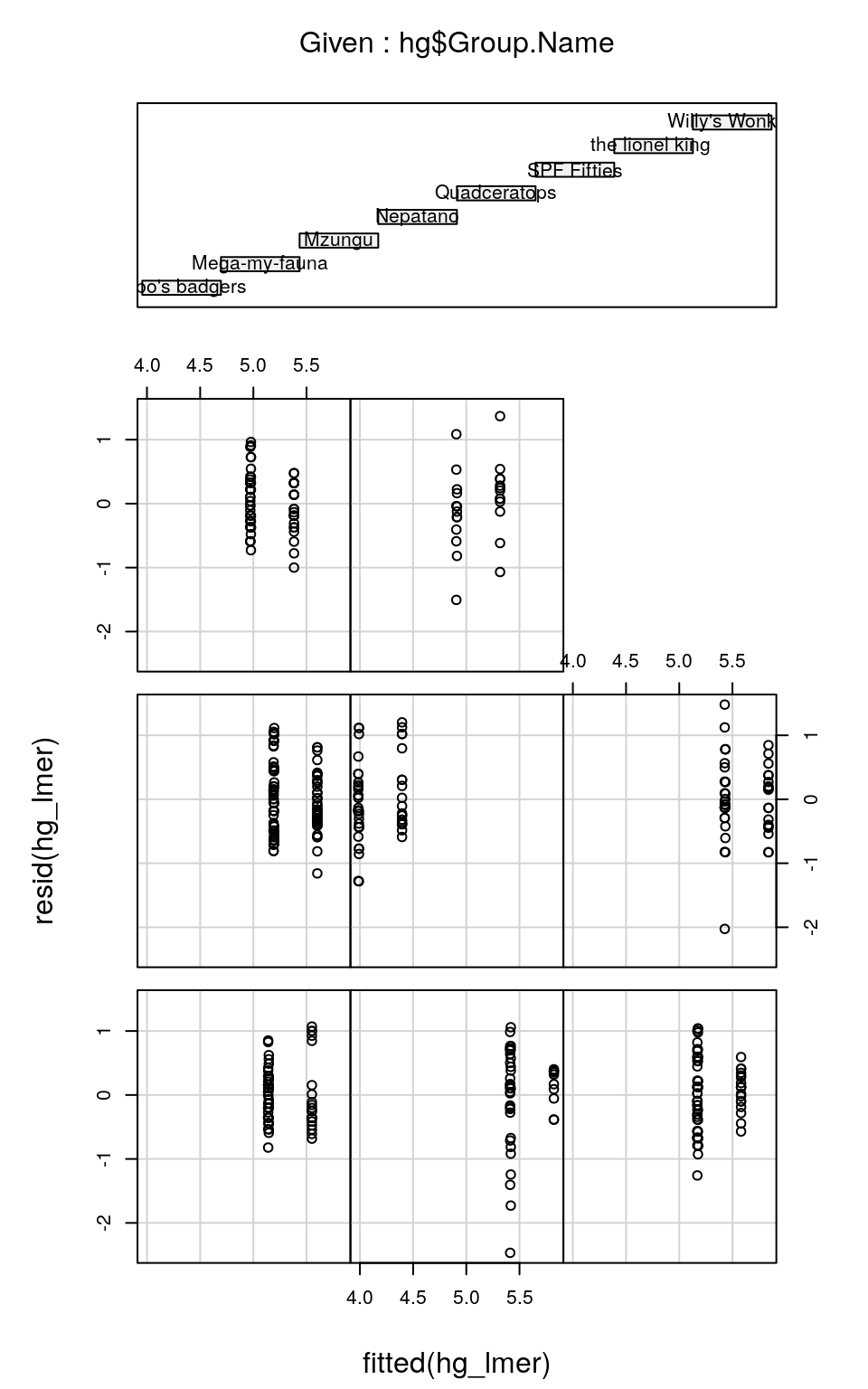

Several model check plots would help us to confirm/deny these assumptions, but note that QQ-plots may not be relevant because of the model structure. Two commonly-used plots are:

- A simple plot of residuals against fitted values, irrespective of random effects (note we have to do this “by hand” here):

plot(resid(hg_lmer) ~ fitted(hg_lmer))

It can often be useful to check the distribution of residuals in each of the groups (e.g. blocks) to check assumptions 1 and 2. We can do this by plotting the residuals against the fitted values, separately for each level of the random effect, using the precious coplot function:

coplot(resid(hg_lmer) ~ fitted(hg_lmer) | hg$Group.Name)

You’ll notice that each sub-figure of this plot, which refers to an individual group of observers, doesn’t contain much data. Therefore it’s hard to judge whether the spread of residuals around fitted values is the same for each observer group. But, with better-replicated experiments (i.e. with many more animals seen per observer group) we could check that the residuals are homoscedastic both within and among observers. (Note that the order of the coplots relating to the groups denoted in the top plot is left-to-right, bottom-to-top: so bilbo's badgers are bottom-left, Mega-my-fauna are bottom-middle and so on…)

4.7 Why aren’t GLMs much good at modelling non-independent data?

This is a short section, but very important. When you perform ANOVA or linear regression with a Gaussian (normal) error structure, you can use F-tests which are based on ‘model’ and ‘residual’ mean-squares or deviances (remember an F-statistic is the ratio of two variances). When data are non-independent, this is really useful because you can choose which part of the experimental design structure from which to select your residual deviance. Remember that you can also use likelihood ratio testing, in which case the chi-squared test statistic is an exact test (although subtlely different to the F-test).

When you perform Generalised Linear Modelling with non-normal error structures, you have to use likelihood ratio testing (i.e. chi-square tests). In the case of a standard GLM, then the LRT test statistic is asymptotically chi-squared (i.e. it’s approximately chi-squared, where the approximation gets better as the sample size gets bigger). However, these are based only on ‘model’ deviance and do not take into account residual deviance. Hence a model of non-independent data cannot provide correct chi-square tests because they ignore the nesting in the experimental design.

Moral: It’s far better to use Generalised Linear Mixed Modelling in these cases.

4.8 Generalised Linear Mixed Modelling (GLMM)

Obviously given your expertise with non-normal data and error structures, by now (if you’re still reading) you’ll be craving a mixed-effects modelling technique that copes with Poisson, binomial, quasi etc. errors: glmer() gives it a good try.

As an example, suppose our hypotheses change. Now we believe that the group sizes vary among species and with distance from the road. Using Number as a response variable is unlikely to have normal residuals (I’m confident). If this was a GLM, we would start by trying family = poisson, because the data are counts (close to zero). Miraculously, that’s all we have to do in glmer() as well.

hometime <- glmer(Number ~ Species * ldist + (1 | Group.Name),

data = hg, family = poisson)

summary(hometime)## Generalized linear mixed model fit by maximum likelihood (Laplace

## Approximation) [glmerMod]

## Family: poisson ( log )

## Formula: Number ~ Species * ldist + (1 | Group.Name)

## Data: hg

##

## AIC BIC logLik deviance df.resid

## 2241.8 2270.8 -1113.9 2227.8 455

##

## Scaled residuals:

## Min 1Q Median 3Q Max

## -1.9259 -0.9735 -0.4698 0.5877 9.6966

##

## Random effects:

## Groups Name Variance Std.Dev.

## Group.Name (Intercept) 0.128 0.3577

## Number of obs: 462, groups: Group.Name, 8

##

## Fixed effects:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) 2.50760 0.34501 7.268 3.64e-13 ***

## Specieswarthog -1.84315 0.43850 -4.203 2.63e-05 ***

## Speciesthomsons gazelle -1.11766 0.45641 -2.449 0.014332 *

## ldist -0.23485 0.06493 -3.617 0.000298 ***

## Specieswarthog:ldist 0.32048 0.08687 3.689 0.000225 ***

## Speciesthomsons gazelle:ldist 0.23311 0.08697 2.680 0.007354 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Correlation of Fixed Effects:

## (Intr) Spcswr Spcstg ldist Spcsw:

## Speciswrthg -0.571

## Spcsthmsnsg -0.502 0.413

## ldist -0.920 0.607 0.533

## Spcswrthg:l 0.576 -0.988 -0.417 -0.629

## Spcsthgzll: 0.541 -0.435 -0.989 -0.591 0.451Simplification to find the Minimal Adequate Model is… a challenge for you to complete!

Congratulations.

4.9 Parametric bootstrapping

Bootstrapping is a resampling technique that was designed for inference when it is not possible (or hard) to generate theoretical sampling distributions for particular quantities of interest. It was introduced by Bradley Efron in 1979. The name is based on the adage: “to pull oneself up by one’s bootstraps”.

The basic idea is that we can generate empirical sampling distributions for a quantity of interest (e.g. a median for example) by sampling from the underlying system a very large number of times and calculating the quantity in each case. This sampling distribution can be used directly to generate confidence intervals for example. Of course, in practice we cannot sample multiple times from the underlying system (we often only have one replicate—the observed data). Instead, bootstrapping aims to approximate this idea by instead re-sampling with replacement from the observed data, and then generating empirical bootstrapped sampling distributions. These ideas have been developed greatly over the years, but the technique is attractive because it is simple to implement (although the estimates are often slightly biased). lme4 has a function bootMer() that can be used to implement specific types of bootstrapping—in this case we want to use parametric bootstrapping. We do not dwell on the details here, but if you want more information please come and see me.

To generate the confidence intervals used in the split-plot design earlier, you can use the following code:

## new data to predict to

newdata <- expand.grid(

density = levels(splityield$density),

irrigation = levels(splityield$irrigation),

fertilizer = levels(splityield$fertilizer)

)

## prediction function to use in bootstrap routine

predFun <- function(mod) {

predict(mod, newdata = newdata, re.form = NA)

}

## produce 1000 bootstrapped samples

boot1 <- bootMer(split_lmer, predFun, nsim = 1000, type = "parametric", re.form = NA)

## function to produce percentile based CIs

sumBoot <- function(merBoot) {

data.frame(

yield = apply(merBoot$t, 2, function(x){

mean(x, na.rm = T)

}),

lci = apply(merBoot$t, 2, function(x){

quantile(x, probs = 0.025, na.rm = T)

}),

uci = apply(merBoot$t, 2, function(x){

quantile(x, probs = 0.975, na.rm = T)

})

)

}

## bind CIs to newdata

split_pred <- cbind(newdata, sumBoot(boot1))4.10 Bayesian modelling

Many of the challenges associated with mixed effects models go away if you move your inference into a Bayesian framework. That’s not to say that other challenges do not arise in their place, mainly in terms of variable selection, however, in general I would recommend using a Bayesian framework for complex models with hierarchical structures, particularly spatio-temporal modelling.

For GLMMs there is an R package called MCMCglmm that will fit a wide range of models in a Bayesian framework. Alternatively there are general purpose Bayesian packages such as WinBUGS, OpenBUGS, JAGS or more recently Stan.

These approaches are beyond the scope of this course, but we are hoping to run a Bayesian Modelling workshop next year, so keep your ears to the ground!