Introduction

Introduction

These notes are intended as an introduction and guide to using Python for data analysis and research.

As big a part of this workshop as any of the formal aims listed below, is that you should enjoy yourself. Python is a fun language because it is relatively easy to read and tells you all about what you did wrong (or what module was broken) if an error occurs.

With that in mind, have fun, and happy learning!

Structure of this course

The main components of this workshop are these notes and accompanying exercises.

In addition there will be an introductory talk. From there, you’ll be left to work through the material at your own pace with invaluable guidance and advice available from the workshop demonstrators.

Where appropriate, key points will be emphasized via short interjections during the workshop.

Recap: What is Python?

Python is the name of a programming language (created by Dutch programmer Guido Van Rossum as a hobby programming project!), as well as the program, known as an interpreter, that executes scripts (text files) written in that language.

Van Rossum named his new programming language after Monty Python’s Flying Circus (he was reading the published scripts from “Monty Python’s Flying Circus” at the time of developing Python!).

It is common to use Monty Python references in example code. For example, the dummy (aka metasyntactic) variables often used in Python literature are spam and eggs, instead of the traditional foo and bar. As well as this, the official Python documentation often contains various obscure Monty Python references.

Jargon

The program is known as an interpreter because it interprets human readable code into computer readable code and executes it. This is in contrast to compiled programming languages like C, C++, and Java which split this process up into a compile step (conversion of human-readable code into computer code) and a separate execution step, which is what happens when you press on a typical program executable, or run a Java class file using the Java Virtual Machine.

Because of it’s focus on code readability and highly expressive syntax (meaning that programmers can write less code than would be required with languages like C or Java), Python has grown hugely in popularity and is now one of the most popular programming languages in use.

Aims

During this workshop, hopefully you will cover most if not all of the following sections

- Useful Python concepts like

- Comprehensions (e.g.

[ abs(x) for x in y ]) - Context managers (

with) - Generators (

yield)

- Comprehensions (e.g.

- Numerical analysis with Numpy

- Visualizing data with Matplotlib

- Scipy: Additional modules

Optional sections include

- R-like data analysis with Pandas

- Image Processing with Scikit Image (

skimage) & Scipy’s ndimage submodule - Classical User Intefaces with PyQt5

- Web interfaces with Flask

- Scraping data from web resources

- Object Oriented Programming

We will not be delivering hours of lectures on programming constructs and theory, or detailing how every function of every module works.

Printing the notes

For both environmental reasons and to ensure that you have the most up-to-date version, we recommend that you work from the online version of these notes.

A printable, single page version of these notes is available here.

Errata

Please email any typos, mistakes, broken links or other suggestions to j.metz@exeter.ac.uk.

Installing on your own machine

If you want to use Python on your own computer I would recomend using one of the following “distributions” of Python, rather than just the basic Python interpreter.

Amongst other things, these distributions take the pain out of getting started because they include all modules you’re likely to need to get started as well as links to pre-configured consoles that make running Python a breeze.

- Anaconda (Win, MacOS, Linux) : Commercially backed free distribution

- WinPython (Windows Only) : Open-source free distribution

- Linux : Python 2 is preinstalled on older linux distributions; Python 3 should be installed on newer distributions. To install Python 3, simply use your favourite package manager. E.g. on debian based systems (Debian, Ubuntu, Mint), running

sudo apt-get install python3from a terminal will install Python 3. Alternatively use Anaconda.

Note : Be sure to download the Python 3, not 2, and get the correct acrchitecture for your machine (ie 32 or 64 bit).

More (pythonic) Python

You should already be familiar with basic Python including

- Basic data types (numbers, strings)

- Container types like lists and dictionaries (

list,dict) - Controlling program flow with

if-else,for, etc - Reading and writing files

- Defining functions

- Documenting code

- Using modules

If you are unclear on any of these points, please refer back to the introductory notes.

We will now focus on some additional, sometimes Python-specific, concepts. By this I mean that even if you know how to program in C or Fortran, you will possiby not know for example what a list comprehension is!

Less “universally useful” additional Python concepts have been included in the optional “Additional advanced Python” section.

Easier to ask for forgiveness than permission (EAFP): trying

A common programming approach in Python (as opposed to e.g. C) is to try something and catch the exception (i.e. if it fails).

This is as opposed to the approach used in other languages, which is to carefully check all inputs and not expect the exception to happen.

To do this we use a try-except block, for example

# At this point we have a variable called my_var that contains a string

try:

num = float(my_var)

except Exception:

# Calling float on my_var failed, it must not be a

# string representation of a number!

num = 0

i.e. instead of carefully inspecting the string contained my_var to determine

whether we can convert it to a number, the Pythonic approach is to simply

try and convert it to a number and handle the case that that fails in

an except block.

Note

You can technically write

try: ... except: ...i.e. omit the

Exception, but this is known as bare exception and will catch every possible error. AddingExceptionwill still catch almost all errors; without it, you won’t even be able to (immediately) terminate your script with ctrl-c!It is almost always best to specify exactly which type of exception you are going to try and allow to happen using

try-except.That way, if something else unexpected happens, you will be notified and Python will terminate in the usual (helpful!) way.

Common Standard Library modules: os and sys

We’ve already encountered os and sys in the introductory notes.

However, there are some common uses of os and sys functions that

merit special mention.

File-name operations: os.path

When, generating full file-paths, or extracting file directory locations, we could potentially use simple string manipulation functions.

For example, if we want to determine the folder of the path string

"C:\Some\Directory\Path\my_favourite_file.txt"

we might think to split the string at the backslash character “" and

return all but the last element of the resulting list (and then

put them together again).

However, not only is this tedious, but it is also then platform dependent (Linux and MacOS use forward-slashes instead of back-slashes).

Instead, it is much better to use the os.path submodule to

- create paths from folder names using

os.path.join - determine a (full) filepath’s directory using

os.path.dirnameoros.path.realpath - Split a file at the extension (for generating e.g. output files) using

os.path.splitextamongst many more useful file path manipulation functions.

Getting command-line input using sys.argv

sys.argv is a list of command line arguments (starting with the

script file name) - you can access it’s elements to get command line

inputs.

To test and play with this you can simply add the lines

import sys

print(sys.argv)

to the top of any (or an empty) script, and run the script.

If you follow the script file name by (a space and then) additional words,

you will see these words appear in the terminal output as being contained in

sys.argv.

Exercise : Quick test of sys.argv

Create a new script (exercise_sys_argv.py) and firstly make it print out the arguments it was passed from the command line.

Next have the script try to convert all arguments (after the script filename) into numbers and print out the squares of the numbers. For any input that was not a number, have it print an message letting the user know.

Run your script with several inputs (after the script file name) to confirm it works.

Sys Answer

import sys

print(sys.argv)

def convert_value(value, index):

"""

Function that try-s to convert input

to a number, or prints an error message

Input are the value to convert, and it's index for the error message

"""

try:

num = float(value)

print(num, "squared is", num**2)

except:

print("Could not convert value", value, "at", index, "to a number")

for index, val in enumerate(sys.argv[1:]):

convert_value(val, index-1)

For example, running python exercise_sys_argv.py 3 4 generates

the output

['...exercise_sys_argv.py', '3', '4']

3.0 squared is 9.0

4.0 squared is 16.0

A more complete description of command line inputs is provided in the optional “additional advanced Python” section, for those requiring more information and more advanced command line input options.

String formatting

Another common Python task is creating strings with formatted representations of numbers.

You should already know that the print function is good at printing out a variety of

data types for us.

Internally, it creates string representations of non-string data before printint the final

strings to the terminal.

To control that process, we often perform what is know as string formatting. To create a format string use the following special symbols in the string

"%s"will be substituted by a string"%d"will be substituted by the integer representation of a number"%f"will be substituted by the float representation of a number

We can also specify additional formatting contraints in these special codes. For example to create a fixed length integer representation of a number we might use

print( "%.8d"%99 )

which outputs 00000099 to the terminal; i.e. the .8 part of the code meant : “always make the

number 8 characters long, (appending zeros as necessary).

NOTE: The new way of doing this is to use the format member function;

print("{:08d}".format(99))

though the old way also still works!

For additional format string options in the context of the newer format string method, see the

documentation here.

Comprehensions

Comprehensions are losely speaking shorthand ways to quickly generate lists, dictionaries, or generators (see later) from relatively simple expressions.

Consider a for loop used to generate a list that holds the

squares of another list:

list1 = [10, 20, 30, 40]

list2 = []

for val in list1:

list2.append( val * val ) # or equivalently val ** 2

The last 3 lines can be neatly replaced using a list comprehension:

list1 = [10, 20, 30, 40]

list2 = [val*val for val in list1]

That’s it! Simple, clean, and easy to understand once you know how.

In words what this means is: “set list2 to : a new list, where the list

items are equal to val*val where val is equal to each item in list list1“.

list2 will then be equal to [100, 400, 900, 1600].

The list comprehension can work with any type of item, e.g.

list3 = ["spam", "and", "eggs"]

list4 = [ thing.capitalize() for thing in list3 ]

would set list4 equal to ["Spam", "And", "Eggs"].

Similarly you can generate a dictionary (nb. dictionaries are created with braces, aka curly brackets) comprehension

words = ['the', 'fast', 'brown', 'fox']

lengths = {word : len(word) for word in words }

(this generates the dictionary

{'the':3, 'fast':4, 'brown':5, 'fox':3} assigned to lengths)

The last example is using tuple syntax (re: tuples are defined using parentheses, aka round brackets),

list1 = [10, 20, 30, 40]

gen = ( val * val for val in list1)

but the crucial difference is that gen is not a tuple (nor a list). It is a generator object, which we will learn about below.

Adding logic to comprehensions

Comprehensions can also include simple logic, to decide which elements

to include.

For example, if we have a list of files, we might want to filter the files

to only include files that end in a specific extension. This could be done

by adding an if section to the end of the comprehension;

# e.g. file list

file_list = ["file1.txt", "file2.py", "file3.tif", "file4.txt"]

text_files = [f for f in file_list if f.endswith("txt")]

This code would result in the variable text_files holding the list

["file1.txt", "file4.txt"] - i.e. only the strings that ended in “txt”!

Exercise : Reading a file with a comprehension

Create a new script file (“exercise_comprehensions.py”) and add code to load the comma separated value data that we used in the Introductory exercises on loading data ( available here: data_exercise_reading.csv).

After opening the file, you should skip the first row as before, and then load the numerical

data for the second column (“Signal”) directly into a list,

using a list comprehension, not a for-loop.

Then use the built-in function sum (which operates on iterables) to print the sum as well

as len to print the number of elements.

Comprehensions Exercise

You can copy the code from the introductory exercise on file reading, up to the point where you skipped the first line of the file.

After that, you only need to add 1 line to read all the data and convert it into numbers!.

In order to do so, you will need to remember that you can

iterate over a file object (e.g. for line in file_obj1).

Comprehensions Answer

Using e.g.

# Load data from a text (csv) file using a comprehension

# OPTIONALLY use the current script's location as the data folder, if that's where the data

import os

ROOT = os.path.realpath(os.path.dirname(__file__))

fid = open(os.path.join(ROOT, "data_exercise_reading.csv"))

# Skip the first line (ie read it but don't keep the data)

fid.readline()

# Now read just the Signal data

sig = [ int( line.split(',')[1] ) for line in fid]

fid.close()

print("Signal data loaded:")

print("N = ", len(sig))

print("Sum = ", sum(sig))

produces

Signal data loaded:

N = 2000

Sum = 199152

Context managers : with

A context manager is a construct to allocate a resource when you need it and handle any required cleanup operations when you’re done.

One of the most common examples of where context managers are useful in Python is reading/writting files.

Instead of

fid = open('filename.txt')

for line in fid:

print(line)

fid.close()

we can write

with open('filename.txt') as fid:

for line in fid:

print(line)

Not only do we save a line of code, but we also avoid forgetting to close the file and potentially running into errors if we were to try and open the file again later in the code.

Context managers are also used in situations such as in the threading module

to lock a thread, and can in fact be added to any function or class

using contextlib.

If you’re interested in advanced uses of context managers, see e.g.

- https://jeffknupp.com/blog/2016/03/07/python-with-context-managers/

- http://preshing.com/20110920/the-python-with-statement-by-example/

- https://www.python.org/dev/peps/pep-0343/

Otherwise, other than switching to using with when opening files,

you probably won’t encouter them too often!

Useful built-in global variables : __file__, __name__

I sneakily introduced some built-in global variables during the Introductory workshop material - apologies to those of you who wondered what they were and where they came from!

The reason I used these variables (namely __file__ and __name__),

was to make things easier with respect to accessing the current script’s

file name, and creating a section of code that would only run if the script

was being run as a script (i.e. not being imported to another script),

respectively.

Firstly a note about naming; the built-in variables are named using two

leading and trailing underscores (__), which in Python is the convention

for letting other developers know that they shouldn’t change a variable.

This is because other modules and functions will likely also make use of these variables, so if you change their values, you might break these other modules and functions!

To recap, two commonly used built-in global variables:

__file__: holds the name of the currently executing script__name__: holds the name of the current module (or the value"__main__"if the script is run as a script - making it useful for adding a script-running-only section of code)

A brief overview of Objects in Python: The “why” of member functions

You have already used modules in Python. To recap; a module is a library of related functions which can be imported into a script to access those functions.

Using a module, e.g. the build-in os module to access operating-system

related functionality, is as simple as adding

import os

to the top of your script.

Then, a module function is accessed using the dot (“.”) notation:

os.listdir()

would for example return the directory listing for the current working directory.

However, you have also seen dot notation used when accessing functions that we’ve referred to as member functions. A member function refers to a function that belongs to an object.

For example, the built-in function open returns a file object.

This file object has member functions such as readlines which is

used to read the entire contents the file into a list.

The reason we are talking about objects is to stress the difference

between a module function like os.listdir, and a member function

e.g.

the_best_file = open("experiment99.txt")

data = the_best_file.readlines()

Another example of a member function that we’ve already used is

append, which is a member function of list objects.

E.g. we saw

list_out = [] # Here we create a list object

for val in list_in:

list_out.append(val*val) # Here we call the member-function "append"

As long as we are happy that there are modules which are collections of functions, and independently there are objects which roughly speaking “have associated data but also have member functions”, we’re ready to start learning about one of the most important libraries available to researchers, Numpy.

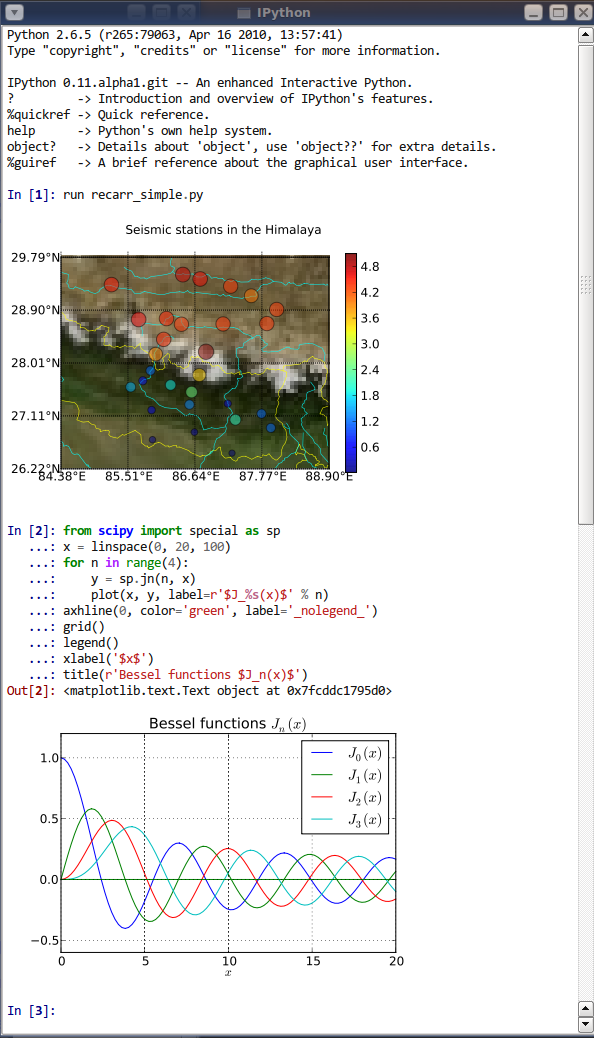

IPython console and Jupyter notebook

Before diving into Numpy in the next section, it’s worth drawing attention to IPython for those of you who haven’t tried it yet.

IPython (for Interactive Python) provides several tools that enhance the Python development experience.

You may have already been using IPython tools (e.g. via Spyder) without being fully aware of it.

The IPython console is an enhanced interactive Python interpreter which imrpoves on the standard interactive interpreter in a number of useful ways:

- syntax highlighting

- tab completion

- Better built-in help system

- Python “magics”, i.e. packaged tools

On Anaconda/WinPython this can be started by opening the IPython Qt Console application, or from a running console by launching ipython3 or jupyter-console.

If Anaconda/WinPython isn’t registered with Windows (i.e. you can’t find it in the Start Menu), you can try using the file explorer to navigate to the Anaconda/WinPython folder (possibly “C:\Anaconda” or “C:\WinPython”) and start the “IPython Qt Console” application.

NOTE The reason that both IPython and Jupyter exist is that IPython was developed first but then (largely in the form of the notebook interface - see below) a more general multi-language (including Julia, Python, R, hence the name, and many others) framework, Jupyter, branched off from the original IPython projct.

Nowadays, Ipython still exists as a Jupyter “kernel”, (amonst many others), and can still be launched via the independent ipython command.

Please note however that while IPython is a great tool it should be just that and resist the temptation to abandon writing your code in scripts!

If you try and write anything vaguely complex in the IPython console, you will quickly find yourself in a pickle when it comes to indentation levels etc, and keeping track of what you did!

In addition variables are all kept in memory making it easy to make mistakes by using a previously defined variable.

The notebook interface

A subequent evolution of the interactive interface provided (first by IPython, now more generally by the Jupyter project) is the notebook.

This is a web interface, which provides cell-based inputs, which Maple or Mathematics users might

find familiar, coupled with inline output, including textual outputs (e.g. from print calls) as

well as inlined plots.

This mix of code and output makes notebooks very attractive for demonstrations, presentations, general teaching, as well as a host of other uses.

However, it should be stressed that while notebooks are very useful for a range of tasks, they are not a good tool for general software development.

There are multiple reasons for this including several major ones

- Preserved state becomes confusing especially when performing out-of-order execution of cells -> leads to repeated use of “restart and run all”, rendering any interactive component much less useful.

- Notebooks tend to promote bad coding practices

- modularisation is harder

- writing tests for code is not straight-forward

- temptation to use non-code cells for documenting code, i.e. not actually documenting code correctly

Exercise : Getting familiar with IPython

For this exercise, instead of writing a script, you’re going to start IPython and get familiar with how to test mini-snippets of code there.

Start the IPython QtConsole, and in the interactive Python session, create variables that hold

- a list holding the numbers

33, 44, 55 - and another that holds a dictionary holding the days of the week (as keys) and the length of the day name (as values)

print your variables to confirm that they hold the required data.

Lastly, type the name of your variable holding the list, then a dot (.) and then press tab.

You should see IPython’s tab completions system pop-up with a list of possible methods. Append the value 66 to the end of the list.

This quick practice of IPython QtConsole operations should get you started in being able to use the console to test small snippets of code!

Exercise : Test out the notebook interface

Launch the jupyter-notebook; a web-page showing the notebook start page should be opened for you.

Practice entering code in cells and evaluating the cells.

In particular, experiment with the %pylab inline magic.

Exercise : Additional notebook-based exercises

If you would like some more exercise based on the content of this page, please download and run the accompanying notebook.

Numerical Analysis with Numpy

Numpy is probably the most significant numerical computing library (module) available for Python.

It is coded in both Python and C (for speed), providing high level access to extremely efficient computational routines.

Basic object of Numpy: The Array

One of the most basic building blocks in the Numpy toolkit is the

Numpy N-dimensional array (ndarray),

which is used for arrays of between 0 and 32

dimensions (0 meaning a “scalar”).

For example,

is a 1d array, aka a vector, of shape (3,), and

is a 2d array of shape (2, 3).

While arrays are similar to standard Python lists (or nested lists) in some ways, arrays are much faster for lots of array operations.

However, arrays generally have to contain objects of the same type in order to benefit from this increased performance, and usually that means numbers.

In contrast, standard Python lists are very versatile in that each list item can be pretty much any Python object (and different to the other elements), but this versatility comes at the cost of reduced speed.

Creating Arrays

ndarrays can be created in a number of ways, most of which directly involve

calling a numpy module function.

From Python objects

Numpy includes a function called array which can be used to

create arrays from numbers, lists of numbers or tuples of numbers.

E.g.

numpy.array([1,2,3])

numpy.array(7)

creates the 1d array [1,2,3], and number (scalar) 7 (albeit as an ndarray!).

Nested lists/tuples produce higher-dimensional arrays:

numpy.array([[1,2], [3,4]])

creates the 2d array

[[1 2]

[3 4]]

NB: you can create arrays from dicts, lists of strings, and other

data types, but the resulting ndarray will contain those objects

as elements (instead of numbers), and might not behave as you expect!

Predefined arrays

There are also array creation functions to create specific types of arrays:

numpy.zeros((2,2)) # creates [[ 0 0]

# [ 0 0]]

numpy.ones(3) # creates [ 1 1 1 ]

numpy.empty(2) # creates [ 6.89901308e-310, 6.89901308e-310]

NB: empty creates arrays of the right size but doesn’t set the values

which start off with values determined by the previous memory content!

Random numbers

numpy.random contains a range of random-number generation functions.

Commonly used examples are

numpy.random.rand(d0, d1, ..., dn) # Uniform random values in a given shape, in range 0 to 1

numpy.random.randn(d0, d1,...,dn) # Standard normally distributed numbers (ie mean 0 and standard deviation 1)

Hint Find more details from an IPython/Jupyter console by typing

help(numpy.random)ornumpy.random?(after having imported numpy!).

For example,

print(numpy.random.rand(5)

produces

[0.97920426 0.03275843 0.07237877 0.4819848 0.71842425]

NB The numbers this generates will be different each time, unless you set the seed.

Loading data from files

One to two dimensional arrays saved in comma-separated text

format can be loaded using numpy.loadtxt:

arr2d = numpy.loadtxt('filename.csv', delimiter=",") # The default delimiter is a space!

Similarly an array can be saved to the same format:

numpy.savetxt('filename.csv', arr2d_2, delimiter=",") # The default delimiter is a space!)

Numpy also has an internal file format that can save and load N-dimensional arrays,

arrNd = numpy.load('inputfile.npy')

and

numpy.save('outputfile.npy', arrNd2)

NOTE: As usual, full paths are safer than relative paths, which are used here for brevity.

However, these files are generally not usable with other non-python programs.

Exercise : Create and save an array

Create a new script (“exercise_numpy_generate.py”) where you create and save a normally distributed random 1d array with 1000 values.

The array should have an offset (~mean value) of 42 and a standard deviation of 5.

Save the array to a csv file, (remember to set the delimiter to “,”) in your scripts folder, called “random_data.csv”.

Add a final line to the script that uses the os module’s listdir function

to print csv files contained in the scripts folder.

Excercise

As mentioned above, normally distributed random numbers are generated

using the numpy.random.randn.

The numbers generated using the function are zero-mean and have a standard deviation of 1.

Changing the offset and standard deviation can be done by adding/subtracting and multiplying the resulting numbers, respectively.

The default delimiter of the savetxt function is the space character,

so we need to change it to a comma to get comma separated values.

os contains operating system related functions and submodules; the

os.path submodule allows us to interact with the file system in a

platform-independent way.

Answer

Using just a few lines:

# Test generating random matrix with numpy

# I tend to like np as an alias for numpy

import numpy as np

import os

# Use the following line to get the script folder full path

# If you'd like to know more about this line, please ask

# a demonstrator

SCRIPT_DIR = os.path.realpath( os.path.dirname( __file__ ))

testdata = 5*np.random.randn( 1000 ) + 42

# NB: the default delimiter is a whitespace character " "

np.savetxt(os.path.join(SCRIPT_DIR, "random_data.csv"), testdata, delimiter=",")

print("\n".join([f for f in os.listdir(SCRIPT_DIR) if f.endswith('csv')]))

Produces a file listing that includes the required data file;

growth_data.csv

data_exercise_reading.csv

random_data.csv

Working with ndarrays

Once an array has been loaded or created, it can be manipulated using either array object member functions, or numpy module functions.

Operations, member attributes and functions

+ Add

- Subtract

* Multiply

/ Divide

// Divide arrays ("floor" division)

% Modulus operator

** Exponent operator (`pow()`)

# Logic

& Logical AND

^ Logical XOR

| Logical OR

~ Logical Not

# Unary

- Negative

+ Positive

abs Absolute value

~ Invert

and comparison operators

== Equals

< Less than

> More than

<= Less than or equal

>= More than or equal

!= Not equal

Many of these operators will work with a variety of operands (things on either side of the arithetic operator).

For example

A = np.array([1, 2, 3])

B = np.array([4, 5, 6])

print(10 * A)

print(A * B)

results in

[10, 20, 30]

[ 4, 10, 18]

Python examines the operands and determines which operation (e.g. here, scalar multiplication or element-wise array multiplication) should be performed.

NB: If you are wondering how to perform the more standard matrix product (aka matrix multiplication),

see the function numpy.dot or numpy.matmul, also applied using the @.

Alternatively, use the more specialized numpy.matrix class (instead of the numpy.ndarray which we

have been using); then the multiplication operator, *, will produce the matrix product.

ndarrays are objects as covered briefly in the last section,

and have useful attributes (~ data associated with the array).

These are accessible using object dot notation, so for example if

you create a numpy array and assign it to variable A, i.e.

A = np.array([1,2,3])

you can access it’s attributes using e.g.

A.shape

Some useful member attributes are

shape Tuple of array dimensions.

ndim Number of array dimensions.

size Number of elements in the array.

dtype Data-type of the array’s elements.

T Same as self.transpose(), except that self is returned if self.ndim < 2.

real The real part of the array.

imag The imaginary part of the array.

flat A 1-D iterator over the array.

There are also many useful member-functions including:

reshape Returns an array containing the same data with a new shape.

transpose Returns a view of the array with axes transposed.

swapaxes Return a view of the array with axis1 and axis2 interchanged.

flatten Return a copy of the array collapsed into one dimension.

nonzero Return the indices of the elements that are non-zero.

sort Sort an array, in-place.

argsort Returns the indices that would sort this array.

min Return the minimum along a given axis.

argmin Return indices of the minimum values along the given axis of a.

max Return the maximum along a given axis.

argmax Return indices of the maximum values along the given axis.

round Return array with each element rounded to the given number of decimals.

sum Return the sum of the array elements over the given axis.

cumsum Return the cumulative sum of the elements along the given axis.

mean Returns the average of the array elements along given axis.

std Returns the standard deviation of the array elements along given axis.

any Returns True if any of the elements of a evaluate to True.

For example, if you wanted to change the shape of an array from 1-d to 2-d, you can use reshape,

A = np.array([1, 2, 3, 4, 5, 6])

print(A)

A = A.reshape(2,3)

print(A)

A = A.reshape(6)

print(A)

would show the “round-trip” of this conversion;

[1 2 3 4 5 6]

[[1 2 3]

[4 5 6]]

[1 2 3 4 5 6]

Non-member functions

Additional functions operating on arrays exist, that the Numpy developers felt shouldn’t be included as member-functions.

This is done for technical reasons releated to keeping the “overhead” of

the numpy.ndarray small.

These include

# Trigonometric and hyperbolic functions

numpy.sin Sine

numpy.cos Cosine

numpy.tan Tangent

# And more trigonometric functions like `arcsin`, `sinh`

# Statistics

numpy.median Calculate the median of an array

numpy.percentile Return the qth percentile

numpy.histogram Compute the histogram of data (does binning, not plotting!)

# Differences

numpy.diff Element--element difference

numpy.gradient Second-order central difference

# Manipulation

numpy.concatenate Join arrays along a given dimension

numpy.rollaxis Roll specified axis backwards until it lies at given position

numpy.hstack Horizontally stack arrays

numpy.vstack Vertically stack arrays

numpy.repeat Repeat elements in an array

numpy.unique Find unique elements in an array

# Other

numpy.convolve Discrete linear convolution of two one-dimensional sequences

numpy.sqrt Square-root of each element

numpy.interp 1d linear interpolation

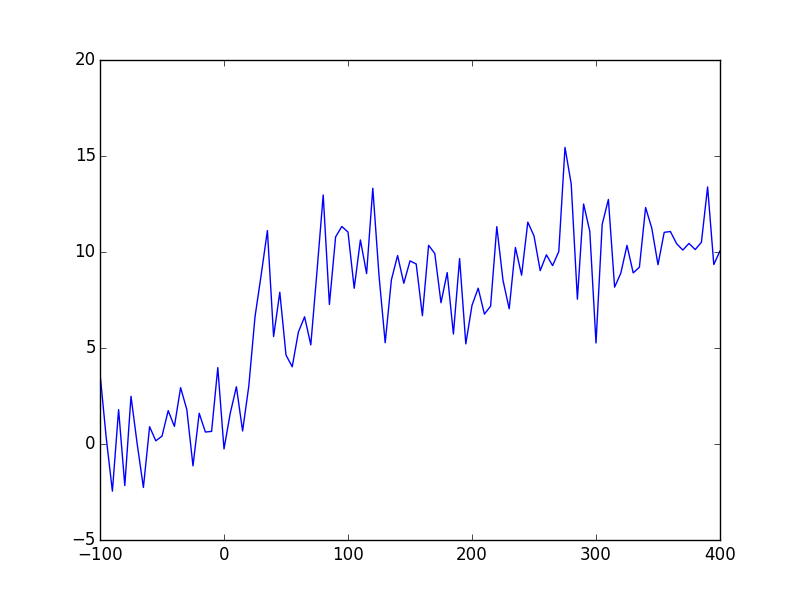

Exercise : Trying some Numpy functions

Let’s practice some of these Numpy functions.

Create a new script (“exercise_numpy_functions.py”), and create a

1d array with the values -100, -95, -90, … , 400 (i.e. start -100,

stop 400, step 5). Call the variable that holds this array taxis as it

corresponds to a time axis.

Now, generate a second, signal array (e.g. sig) from the first, consisting of

two parts:

- a normally distributed noise (zero mean, standard deviation 2) baseline up to the point where taxis is equal to 0.

- Increasing exponential “decay”, i.e. C ( 1 - e^{-kt} ) with the same with the same noise as the negative time component, with C = 10 and k = 1/50.

Lastly combine the two 1d arrays into an 2d array, where each 1d array

forms a column of the 2d array (in the order taxis, then sig).

Save the array to a csv file, (remember to set the delimeter to “,”) in your scripts folder, called “growth_data.csv”.

List the script folder csv files as before to confirm that the file has been created and is in the correct location.

Exercise

You’ve just seen a long list of Numpy functions; let’s try using some of those functions!

Firstly, a range of number can be generated using numpy.arange.

Check the function’s documentation for details of how to generate the desired range.

We’ve already used the numpy.random submodule in the last exercise, so

we should be happy using it again; this time we don’t need to change the

offset, and the desired standard deviation is 2.

The second part of our “signal generation” task is to generate values along the lines of y = f(t), where f = C ( 1 - e^{-kt} ) and the specific values of C and k are given in the question text.

There are several ways that we can combine arrays including numpy.array

to create a new array from the individual arrays, or numpy.vstack (and then

transposing the result using .T).

Answer

# Testing some numpy functions

import numpy as np

import os

# Use the following line to get the script folder full path

# If you'd like to know more about this line, please ask

# a demonstrator

SCRIPT_DIR = os.path.realpath( os.path.dirname( __file__ ))

taxis = np.arange(-100, 401, 5)

sig = 2 * np.random.randn(len(taxis))

# Signal function: 10 (1 - e^{-kt})

sig[ taxis >= 0 ] += 10 * ( 1 - np.exp(-taxis[ taxis >= 0 ] / 50) )

# We need to transpose the array using .T as otherwise the taxis and

# signal data will be in row-form, not column form.

output = np.array([ taxis, sig ]).T

np.savetxt( os.path.join(SCRIPT_DIR, "growth_data.csv"), output, delimiter=",")

# Now lets check the files in the script folder

print("\n".join([f for f in os.listdir(SCRIPT_DIR) if f.endswith("csv")]))

produces

growth_data.csv

data_exercise_reading.csv

random_data.csv

Accessing elements

Array elements can be accessed using the same slicing mechanism as lists; e.g.

if we have a 1d array assigned to arr1 containing [5, 4, 3, 2, 1], then

arr1[4]accesses the 5th element =1arr1[2:]accesses the 3rd element onwards =[3,2,1]arr1[-1]accesses the last element =1arr1[-1::-1]accesses the last element onwards with step -1, =[1,2,3,4,5](i.e. the elements reversed!)

Similarly higher dimensional arrays are accessed using comma-separated slices.

If we have a 2d array;

a = numpy.array([[ 11, 22],

[ 33, 44]])

(which we also represent on one line as [[ 11, 22], [ 33, 44]]), then

a[0]accesses the first row =[11, 22]a[0,0]accesses the first element of the first row =11a[-1,-1]accesses the last element of the last row =44a[:,0]accesses all elements of the first column =[11,33]a[-1::-1]reverses the row order =[[33, 44], [11,22]]a[-1::-1,-1::-1]reverses both row and column order =[[ 44, 33], [ 22, 11]]

The same logic is extended to higher-dimensional arrays.

Using binary arrays (~masks)

In addition to the slice notation, a very useful feature of Numpy is

logical indexing, i.e. using a binary array which has the same shape

as the array in question to access those elements for which the binary array

is True.

Note that the returned elements are always returned as a flattened array. E.g.

arr1 = np.array([[ 33, 55],

[ 77, 99]])

mask2 = np.array([[True, False],

[False, True]])

print( arr1[ mask2 ])

would result in

[33, 99]

being printed to the terminal.

The usefulness of this approach should become apparent in a later exercise!

Exercise : Load and analyze data

Write a new script to

- Load the numpy data you saved in the previous exercise (“growth_data.csv”)

- Print to the console the following statistics for the signal column

- mean

- median

- standard deviation

- shape

- histogrammed information (10 bins)

- Next, select only the signal point for taxis >= 0 and print the same statistics

Exercise

The data can be loaded very similarly to how it was saved!

The required functions are all listed in the function list (some are also member-functions). Check their documentation to determine how to use them.

Numpy also contains a histogram function which bins data and returns bin edge locations and bin populations (~counts).

Answer

# Load an array of number from a file and run some statistics

import numpy as np

import os

# Use the following line to get the script folder full path

# If you'd like to know more about this line, please ask

# a demonstrator

SCRIPT_DIR = os.path.realpath( os.path.dirname( __file__ ))

data = np.loadtxt(os.path.join(SCRIPT_DIR, "growth_data.csv"), delimiter=",")

# We can access the signal column as data[:,1]

sig = data[:,1]

tax = data[:,0]

# Print some stats

print("Full data stats")

print("Mean ", sig.mean())

print("Median ", np.median(sig)) # This one's not a member function!

print("Standard deviation ", sig.std())

print("Shape ", sig.shape)

print("Histogram info: ", np.histogram(sig, bins=10)) # Nor is this!

# Now "select" only the growth portion of the signal,

print("Growth section stats")

growth = sig[ tax >= 0 ]

print("Mean ", growth.mean())

print("Median ", np.median(growth)) # not a member function

print("Standard deviation ", growth.std())

print("Shape ", growth.shape)

print("Histogram info: ", np.histogram(growth, bins=10)) # Nor this

produces

Full data stats

Mean 6.87121549517

Median 8.18040889467

Standard deviation 4.23277585288

Shape (101,)

Histogram info: (array([ 7, 7, 8, 9, 2, 18, 28, 12, 8, 2]), array([ -2.32188019, -0.56242489, 1.19703042, 2.95648572,

4.71594102, 6.47539632, 8.23485162, 9.99430693,

11.75376223, 13.51321753, 15.27267283]))

Growth section stats

Mean 8.40989255375

Median 8.66808213756

Standard deviation 3.11264875624

Shape (81,)

Histogram info: (array([ 2, 0, 2, 7, 2, 18, 28, 12, 8, 2]), array([ -2.32188019, -0.56242489, 1.19703042, 2.95648572,

4.71594102, 6.47539632, 8.23485162, 9.99430693,

11.75376223, 13.51321753, 15.27267283]))

Dealing with NaN and infinite values

Generally speaking, Python handles division by zero as an error; to avoid this

you would usually need to check whether a denominator is non-zero or not using

an if-block to handle the cases separately.

Numpy on the other hand, handles such events in a more sensible manner.

Not a Number

NaNs (numpy.nan) (abbreviation of “Not a Number”) occur when a computation can’t

return a sensible number; for example a Numpy array element 0 when divided

by 0 will give nan.

They can also be used can be used to represent missing data.

NaNs can be handled in two ways in Numpy;

- Find and select only non-NaNs, using

numpy.isnanto identify NaNs - Use a special Nan-version of the Numpy function you want to perform (if it exists!)

For example, if we want to perform a mean or maximum operation, Numpy offers nanmean and

nanmax respectively.

Additional NaN-handling version of argmax, max, min, sum, argmin,

mean, std, and var exist (all by prepending nan to the function name).

When a pre-defined NaN-version of the function you need doesn’t exist, you will need to select only non-NaN values; e.g.

allnumbers = np.array([np.nan, 1, 2, np.nan, np.nan])

# We can get the mean already

print(np.nanmean(allnumbers)) # Prints 1.5 to the terminal

# But a nan version of median doesn't exist, and if we try

print(np.median( allnumbers )) # Prints nan to the terminal

# The solution is to use

validnumbers = allnumbers[ ~ np.isnan(allnumbers) ]

print(np.median( validnumbers )) # Prints 1.5 to the terminal

To Infinity, and beyond…

Similarly, a Numpy array non-zero element divided by 0 gives inf, Numpy’s representation of infinity.

However, Numpy does not have any functions that handle infinity in the same way as the nan-functions (i.e. by essentially ignoring them). Instead, e.g. the mean of an array containing infinity, is infinity (as it should be!). If you want to remove infinities from calculations, then this is done in an analogous way to the NaNs;

allnumbers = np.array([np.inf, 5, 6, np.inf])

print(allnumbers.mean()) # prints inf to the terminal

finite_numbers = allnumbers[ ~np.isinf(allnumbers) ]

print(finite_numbers.mean()) # prints 5.5 to the terminal

Tip:

np.isfinitefor both

np.isfinitewill return an array that containsTrueeverywhere the

input array is neither infinite nor a NaN.

Additional exercise resources

If you would like to further practice Numpy, please visit this resource.

Next steps: Visualization helps!

Now that we have a basic understanding of how to create and manipulate arrays, it’s already starting to become clear that producing numbers alone makes understanding the data difficult.

With that in mind then, let’s start working on visualization, and perform further Numpy analyses there!

Plotting with Matplotlib

In the last section we were introduced to Numpy and the fact that it is a numerical library capable of “basic” numerical analyses on Arrays.

We could use Python to analyse data, and then save the result as comma separated values, which are easily imported into e.g. GraphPad or Excel for plotting.

But why stop using Python there?

Python has some powerful plotting and visualization libraries, that allow us to generate professional looking plots in an automated way.

One of the biggest of these libraries is Matplotlib.

Users of Matlab will find that Matplotlib has a familiar syntax.

For example, to plot a 1d array (e.g. stored in variable arr1d)

as a line plot, we can use

import matplotlib.pyplot as plt

plt.plot(arr1d)

plt.show()

Reminder: aliases

Here we used the alias (

as) feature when importing matplotlib to save having to typematplotlib.pyploteach time we want to access thepyplotsub-module’s plotting functions!

NOTE

In the following notes, most of the time when I refer to “matplotlib’s X

function” (where X is changeable), I’m actually referring to the function

found in matplotlib.pyplot, which is matplotlib’s main plotting

submodule.

The show function

What’s going on here?

The plot function does just that; it generates a

plot of the input arguments.

However, matplotlib doesn’t (by default) show any plots until the show

function is called. This is an intended feature:

- To create multiple plots/figures before pausing the script to show them

- To not show any plots/figures, instead using a plot saving function

- this mode of operation is better suited to non-interactive (batch) processing

It is possible to change this feature so that plots are shown as soon as they

are created, using matplotlib’s ion function (~ interactive on).

Creating new figures with figure

Often we need to show multiple figures; by default calling several plot commands, one after the other, will cause each new plot to draw over previous plots.

To create a new figure for plotting, call

plt.figure()

# Now we have a new figure that will receive the next plotting command

A short sample of plot types

Matplotlib is good at performing 2d plotting.

As well as the “basic” plot command, matplotlib.pyplot includes

bar : Bar plot (also barh for horizontal bars)

barb : 2d field of barbs (used in meteorology)

boxplot : Box and whisker plot (with median, quartiles)

contour : Contour plot of 2d data (also contourf - filled version)

errorbar : Error bar plot

fill_between : Fills between lower and upper lines

hist : Histogram (also hist2d for 2 dimensional histogram)

imshow : Image "plot" (with variety of colour maps for grayscale data)

loglog : Log-log plot

pie : Pie chart

polar : Polar plot

quiver : Plot a 2d field of arrows (vectors)

scatter : Scatter plot of x-y data

violinplot : Violin plot - similar to boxplot but width indicates distribution

Rather than copying and pasting content, navigate to the Matplotlib Gallery page to view examples of these and more plots.

You’ll find examples (including source code!) for most of your plotting needs there.

Exercise : Simple plotting

First of all, let’s practice plotting 1d and 2d data using some of the plotting functions mentioned above.

Create a new script file (“exercise_mpl_simple.py”), and start off by loading and creating some sample data sets:

- Load the data you generated at the end of the last section (“growth_data.csv”)

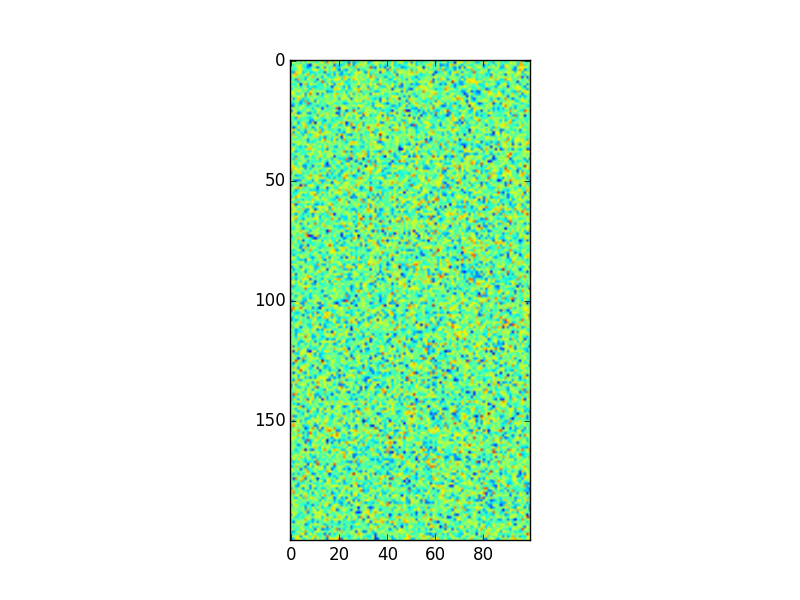

- Create a 2d array of random (you can pick which distribution) noise, of size 200 x 100.

Create the following plots and display them to the screen

- A 1d line plot of the first column of the growth data (~t) vs the signal

- A histogram of the t >= 0 part of the signal data (i.e. a graphical version

of the binning statistics you printed to the terminal - use

matplotlib’shistfunction. - An image plot (i.e. an image) of the 2d data

Exercise

Loading and generating Numpy arrays is very similar to the exericises in the last section.

All that’s really being added here are some very simple plot functions,

namely plot, hist, and imshow.

For this first example you will either need to call matplotlib’s show

function after each plot is created, or you could create a new figure

for each plot using the figure function, and call show only once

at the end of the script.

Answer

# Practice of basic matplotlib plotting commands

import numpy as np

import matplotlib

matplotlib.use("agg")

import matplotlib.pyplot as plt

import os

ROOT = os.path.realpath(os.path.dirname(__file__))

# Load and generate arrays

arr1 = np.loadtxt(os.path.join(ROOT, "growth_data.csv"), delimiter=",")

arr2 = 3.14 * np.random.randn(200,100) + 66

# Do plotting

# NOTE: Instead of the `savefig` lines, your code should be calling `plt.show()`!

plt.plot(arr1[:,0], arr1[:,1])

plt.savefig("answer_mpl_simple1.png"); plt.clf()

plt.hist(arr1[:,1], bins=10)

plt.savefig("answer_mpl_simple2.png"); plt.clf()

plt.imshow(arr2)

plt.savefig("answer_mpl_simple3.png"); plt.clf()

This should produce the following plots:

Customizing the figure

Most plotting functions allow you to specify additional keyword arguments, which determine the plot characteristics.

For example, a the line plot example may be customized to have a red dashed line and square marker symbols by updating our first code snippet to

plt.plot(arr1d, '--', color="r", marker="s")

(or the shorthand plt.plot(arr1d, "--r"))

Axis labels, legends, etc

Additional figure and axes properties can also be modified. To do so, we need to access figure/axis respectively.

To create a new (empty) figure and corresponding figure object,

use the figure function:

fig1 = plt.figure()

# Now we can modify the figure properties, e.g.

# we can set the figure's width (in inches - intended for printing!)

fig1.set_figwidth(10)

# Then setting the figure's dpi (dots-per-inch) will determine the

# number of pixels this corresponds to...

fig1.set_dpi(300) # I.e. the figure width will be 3000 px!

If instead we wish to modify an already created figure object,

we can either get a figure object by passing it’s number propery

to the figure function e.g.

f = plt.figure(10) # Get figure number 10

or more commonly, we get the active figure (usually the last created figure) using

f = plt.gcf()

Axes are handled in a similar way;

ax = plt.axes()

creates a default axes in the current figure (creates a figure if none are present), while

ax = plt.gca()

gets the current (last created) axes object.

Axes objects give you access to changing background colours, axis colours, and many other axes properties.

Some of the most common ones can be modified for the current axes without

needing to access the axes object as matplotlib.pyplot has convenience

functions for this e.g.

plt.title("Aaaaarghh") # Sets current axes title to "Aaaaarghh"

plt.xlabel("Time (s)") # Sets current axes xlabel to "Time (s)"

plt.ylabel("Amplitude (arb.)") # Sets current axes ylabel to "Amplitude (arb.)"

# Set the current axes x-tick locations and labels

plt.yticks( numpy.arange(5), ('Tom', 'Dick', 'Harry', 'Sally', 'Sue') )

A note on style

Matplotlib’s default plotting styles are often not considered to be desirable, with critics citing styles such as the R package ggplot’s default style as much more “professional looking”.

Fortunately, this criticism has not fallen on deaf ears, and while it has always been possible to create your own customized style, Matplotlib now includes additional styles by default as “style sheets”.

In particular, styles such as ggplot are very popular and essentially emulate ggplot’s style (axes colourings, fonts, etc).

The style may be changed before starting to plot using e.g.

plt.style.use('ggplot')

A list of available styles may be accessed using

plt.style.available

(on the current machine this holds : ['dark_background',

'fivethirtyeight', 'bmh', 'grayscale', 'ggplot'])

You may define your own style file, and after placing it in a specific folder ( “~/.config/matplotlib/mpl_configdir/stylelib” ) you may access your style in the same way.

Styles may also be composed together using

plt.style.use(['ggplot', 'presentation'])

where the rules in the right-most style will take precedent.

Exercise : trying a new style

Repeat the simple plotting exercise with the "ggplot" style;

to do this, copy and paste the code from the simple plotting exercise

but add the code to change the style to the ggplot style

before the rest of the code.

Answer

To change the style to ggplot, simply add

plt.style.use('ggplot')

after having imported matplotlib.pyplot (as plt).

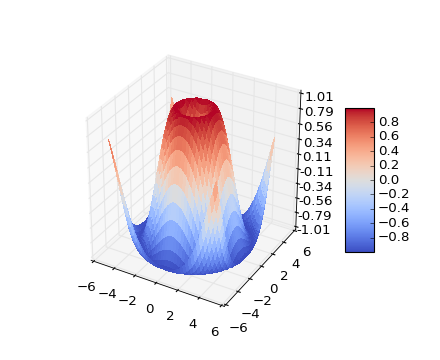

3d plotting

While matplotlib includes basic 3d plotting functionality (via mplot3d),

we recommend using a different library if you need to generate

“fancy” looking 3d plots.

A good alternative for such plots isa package called mayavi.

However this is not included with WinPython and the simplest route to installing it involves installing the Enthough Tool Suite (http://code.enthought.com/projects/).

For the meantime though, if you want to plot 3d data, stick with the mplot3d

submodule.

For example, to generate a 3d surface plot of the 2d data (i.e. the “pixel” value would correspond to the height in z), we could use

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

fig = plt.figure()

ax = fig.add_subplot(111, projection='3d')

ax.plot_surface(X, Y, Z)

where X, Y, Z are 2d data values.

X and Y are 2d representations of the axis values which can be

generated using utility functions, e.g. pulling an example from the

Matplotlib website,

from mpl_toolkits.mplot3d import Axes3D

from matplotlib import cm

from matplotlib.ticker import LinearLocator, FormatStrFormatter

import matplotlib.pyplot as plt

import numpy as np

fig = plt.figure()

ax = fig.gca(projection='3d')

X = np.arange(-5, 5, 0.25)

Y = np.arange(-5, 5, 0.25)

X, Y = np.meshgrid(X, Y)

R = np.sqrt(X**2 + Y**2)

Z = np.sin(R)

surf = ax.plot_surface(X, Y, Z, rstride=1, cstride=1, cmap=cm.coolwarm,

linewidth=0, antialiased=False)

ax.set_zlim(-1.01, 1.01)

ax.zaxis.set_major_locator(LinearLocator(10))

ax.zaxis.set_major_formatter(FormatStrFormatter('%.02f'))

fig.colorbar(surf, shrink=0.5, aspect=5)

plt.show()

Saving plots

Now that we have an idea of the types of plots that can be generated using Matplotlib, let’s think about saving those plots.

If you call the show command, the resulting figure window includes

a basic toolbar for zooming, panning, and saving the plot.

However, as a Pythonista, you will want to automate saving the plot (and probably forego viewing the figures until the script has batch processed your 1,000 data files!).

The command to save the currently active figure is

plt.savefig('filename.png')

Alternatively, if you store the result of the

plt.figure() function in a variable as mentioned above

you can subsequently use the savefig member-function

of that figure object to specifically save that figure, even if

it is no longer the active figure; e.g.

fig1 = plt.figure()

#

# Other code that generates more figures

#

# ...

#

fig1.savefig('figure1.pdf')

Note on formats: Raster vs Vector

The filename extension that you specify in the savefig function

determines the output type; matplotlib supports

- png - good for images!

- jpg (not recommended except for drafts!)

- pdf - good for (vector) line plots

- svg

as well as several others.

The first two formats are known as raster formats, which means that the entire figure is saved as a bunch of pixels.

This means that if you open the resulting file with an image viewer, and zoom in, eventually the plot will look pixelated/blocky.

The second two formats are vector formats, which means that a line plot is saved as a collection of x,y points specifying the line start and end points, text is saved as a location and text data and so on.

If you open a vector format file with e.g. a PDF file viewer, and zoom in, the lines and text will always have smooth edges and never look blocky.

As a general guideline, you should choose to mainly use vector file (pdf, eps, or svg) output for plots as they will preserve the full data, while raster formats will convert the plot data into pixels.

In addition, if you have vector image editing software like Inkscape or Adobe Illustrator, you will be able to open the vector image and much more easily change line colours, fonts etc.

Other annotations

We already saw how to add a title and x and y labels; below are some more examples of annotations that can be added.

You could probably guess most of what the functions do (or have guessed what the function would be called!):

plt.title("I'm a title") # Note single quote inside double quoted string is ok!

plt.xlabel("Time (${\mu}s$)") # We can include LaTex for symbols etc...

plt.ylabel("Intensity (arb. units)")

plt.legend("Series 1", "Series 2")

plt.colorbar() # Show a colour-bar (usually for image data).

# Add in an arrow

plt.arrow(0, 0, 0.5, 0.5, head_width=0.05, head_length=0.1, fc='k', ec='k')

The last command illustrates how as with most

most plotting commands, arrow accepts a

large number of additional keyword arguments (aka kwargs) to

customize colours (edge and face), widths, and many more properties.

Exercise : comparing raster and vector formats

Create a new script file (exercise_mpl_raster_vs_vector.py) and create

a simple line plot of y=x^2 between x=-10 and x=10.

Add in labels for the x and y axis; call the x axis, "x (unitless)" and the

y axis “y = x^2” (see if you can figure out how to render the squared symbol, i.e.

a super-script 2; those of you familiar with LaTex should find this easy!).

Save the plot as both a png and pdf file, and open both side-by-side. Zoom in to compare the two formats.

Answer

To generate the x and y data use Numpy’s linspace function for x, and the generate the y data from

the x data.

To render mathematical symbols, use the latex expression: “$x^2$” - if you’re not familiar with LaTex and don’t care about being able to render mathematical expressions and symbols, don’t worry about this!

Otherwise, you might want to read up about mathematical expressions in LaTex.

Use the plt.savefig function to save the figure twice, once with file extension (the end of the

filename) png, and once with extension pdf.

Sub-plots

There are two common approaches to creating sub-plots.

The first is to create individual figures with well thought out sizes (especially font sizes!), and then combine then using an external application.

Matplotlib can also simplify this step for us, by providing subplot functionality. We could, when creating axes, reposition them ourselves in the figure. But this would be tedious, so Matplotlib offers convenience functions to automatically lay out axes for us.

The older of these is the subplot command, which lays axes out in a regular

grid.

For example

plt.subplot(221) # Create an axes object with position and size for 2x2 grid

plt.plot([1,2,3])

plt.title("Axes 1")

plt.subplot(222)

plt.plot([1,2,3])

plt.title("Axes 2")

plt.subplot(223)

plt.plot([1,2,3])

plt.title("Axes 3")

plt.subplot(224)

plt.plot([1,2,3])

plt.title("Axes 4")

Note that the subplot command uses 1-indexing of axes (not 0-indexing!).

The above could be simplified (at least in some scenarios) using a for-loop,

e.g.

# datasets contains 4 items, each corresponding to a 1d dataset...

for i, data in enumerate(datasets):

plt.subplot(2,2,i+1)

plt.plot(data)

plt.title("Data %d"%(i+1))

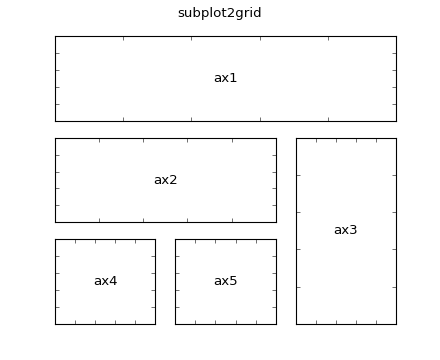

Recently, Matplotlib added a new way of generating subplots, which makes it easier to generate non-uniform grids.

This is the subplot2grid function, which is used as follows,

ax1 = plt.subplot2grid((3,3), (0,0), colspan=3)

ax2 = plt.subplot2grid((3,3), (1,0), colspan=2)

ax3 = plt.subplot2grid((3,3), (1, 2), rowspan=2)

ax4 = plt.subplot2grid((3,3), (2, 0))

ax5 = plt.subplot2grid((3,3), (2, 1))

would generate

(without the labels!)

(without the labels!)

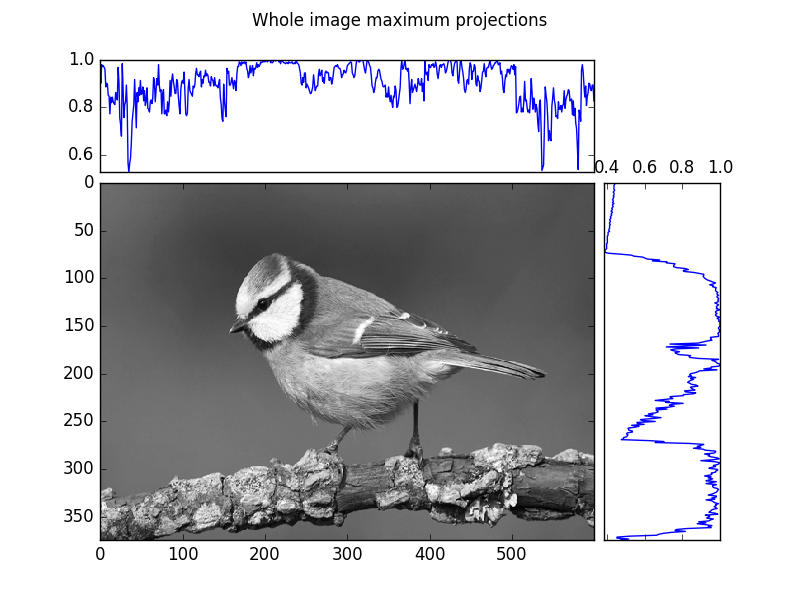

Exercise : Adding subplots and insets

Download the image from here: Image File.

Create a new script (“exercise_mpl_gridplots.py”), and

- Load the image using

matplotlib.pyplot’simreadconvenience function - The image may be loaded into a 3d array - RGB(A) - if so, convert it to grayscale my taking the mean of the first 3 colour channels

- Create a grid of 3 plots, with the following shape

2x6

---------

| c 8x2 |

------------

| || |

| b ||a|

| 8x6 || |

| || |

------------

Here,

- a is a maximum projection onto the y axis (2x8 panel)

- b is the full grayscale image (8x6 panel)

- c is a maximum projection onto the x axis (8x2 panel)

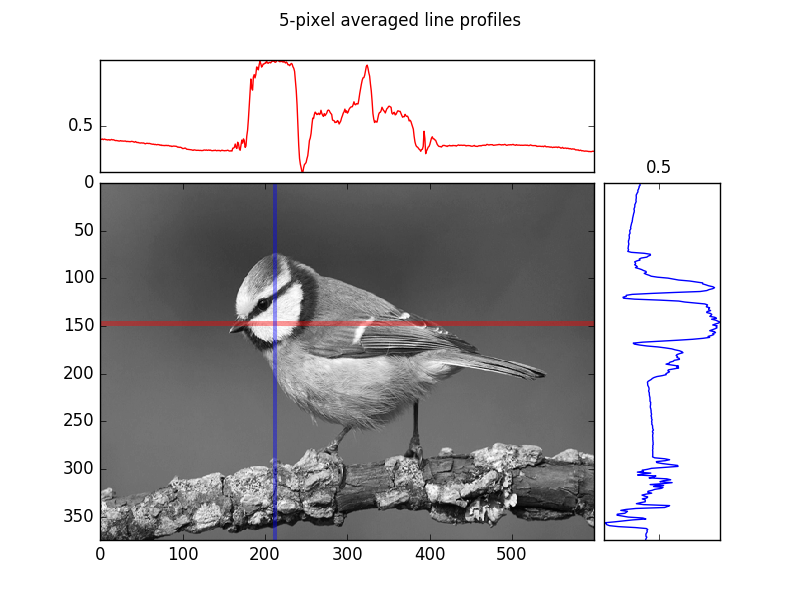

Next create a similar subplot figure but this time, instead of performing maximum projections along the x and y axes, generate the following mean line-profiles:

- A profile along a horizontal line at y=147 averaged over a of width 5 pixels (i.e. averaging from y=145 to 149 inclusive)

- A profile along a vertical line at x=212 averaged over a width of 5 pixels (i.e.

Exercise

The main thing we’re practicing here is the use of the subplottling functionalities in Matplotlib.

Using subplot2grid will make this much easier than trying to

manually position axes in the figure.

Maximum projections and line profile

A maximum projection means for the given dimension (e.g. row or column), extract the maximum value of the image (array) each for row or column.

A basic line profile means given a line (in our case horizontal and vertical lines, which correspond to rows and columns of the array respectively), simply pull out those values and plot them as a 1d plot.

An often more robust version of the simple line profile, is a line profile of finite (> 1 pixel) width that is averaged at each point along the line across the pixels along the width. Put another way, visualize first of all a rectangle instead of a line, and perform a mean-projection of the rectangular (2d) array of values. The resulting 1d array of values is the width-meaned line profile.

If you’d like more information about generating the maximum projections or the line profiles, ask a demonstrator.

Answer

# Create a subplot figure

# NOTE: You shouldn't need the following 2 lines, yet, but they're useful for

# "headless" plotting (i.e. on machines with no display)

import matplotlib

matplotlib.use('agg')

import matplotlib.pyplot as plt

import numpy as np

# "Usual" script folder extraction method (as used in many previous exercises!)

import os

SCRIPT_DIR = os.path.realpath(os.path.dirname(__file__))

# Load the data

im = plt.imread(os.path.join(SCRIPT_DIR, "grayscale.png"))

# Take the mean of the RGB channels (dims are Width, Height, Colour Channels)

# (up to 3 as "Alpha" aka opacity channel also included at index 3)

im = im[...,:3].mean(axis=-1)

# Maximum projections using the Numpy Array max member-function with

# axis keyword argument to select the dimension to "project" along.

maxx = im.max(axis=0)

maxy = im.max(axis=1)#[-1::-1]

def plot_im_and_profiles(im, palongx, palongy, cx = "b", cy = "b"):

"""

Use a function to do the plotting as we do essentially the same thing

more than once, ie generate an image plot with the line-profile plots

above and to the right of the image plot

"""

# Create 3 axes with specified grid dimensions

ax_a = plt.subplot2grid((8,10), (2,8), colspan=2, rowspan=6)

ax_a.plot(palongy, np.arange(len(palongy)), color=cy)

ax_a.locator_params(tight=True, nbins=4)

ax_a.xaxis.set_tick_params(labeltop="on", labelbottom='off')

ax_a.yaxis.set_visible(False)

ax_b = plt.subplot2grid((8,10), (2,0), colspan=8, rowspan=6)

ax_b.imshow(im, cmap='gray')

plt.axis('tight')# ax_b.

ax_c = plt.subplot2grid((8,10), (0,0), colspan=8, rowspan=2)

ax_c.plot(palongx, color=cx)

ax_c.locator_params(tight=True, nbins=4)

ax_c.xaxis.set_visible(False)

# Return the axes objects so we can easily add things to them

# in our case we want to add an indication of where the line

# profiles are taken from

return ax_a, ax_b, ax_c

plot_im_and_profiles(im,maxx, maxy[-1::-1])

# Suptitle gives a whole figure a title

# (as opposed to individual axes

plt.suptitle("Whole image maximum projections")

plt.savefig(os.path.join(SCRIPT_DIR, "answer_mpl_subplot1.png"))

# Now add in a "line-profile", width 5 and averaged

# at y ~ 145 and x ~ 210

profx = im[145:150, :].mean(axis=0)

profy = im[:, 210:215].mean(axis=1)[-1::-1]

ax_a, ax_b, ax_c = plot_im_and_profiles(im,profx, profy, cx="r", cy="b")

# Show the line profile regions using `axvspan` and `axhspan`

ax_b.axhspan(145,150, fc = "r", ec="none", alpha=0.4)

ax_b.axvspan(210,215, fc = "b", ec="none", alpha=0.4)

plt.suptitle("5-pixel averaged line profiles")

plt.savefig(os.path.join(SCRIPT_DIR, "answer_mpl_subplot2.png"))

This generates the following two figures:

Additional exercise resources

If you would like to further practice Matplotlib, please visit this resource.

Scipy : Additional scientific computing modules

Numpy is one of the core modules of the Scipy “ecosystem”;

however, additional numerical

routines are packages in the separate scipy module.

The sub-modules contained in scipy include:

scipy.cluster: Clustering and cluster analysisscipy.fftpack: Fast fourier transform functionsscipy.integrate: Integration and ODE (Ordinary Differential Equations)scipy.interpolate: Numerical interpolation including splines and radial basis function interpolationscipy.io: Input/Output functions for e.g. Matlab (.mat) files, wav (sound) files, and netcdf.scipy.linalg: Linear algebra functions like eigenvalue analysis, decompositoins, and matrix equation solversscipy.ndimage: Image processing functions (works on high dimensional images - hence nd!)scipy.optimize: Optimization (minimize, maximize a function wrt variables), curve fitting, and root findingscipy.signal: Signal processing (1d) including convolution, filtering and filter design, and spectral analysisscipy.sparse: Sparse matrix support (2d)scipy.spatial: Spatial analysis related functionsscipy.statistical: Statistical analysis including continuous and discreet distributions and a slew of statistical functions

While we don’t have time to cover all of these submodules in detail, we will give a introduction to their general usage with a couple of exercise and then a sample project using a few of them to perform some 1d signal processing.

Exercise : Curve fitting

Curve fitting represents a relatively common research task, and fortunatley Python includes some great curve fitting functionalities.

Some of the more advanced ones are present in additional Scikit libraries,

but Scipy includes some of the most common algorithms in the optimize

submodule, as well as the odr submodule, which covers the more elaborate

“orthogonal distance regression” (as opposed to ordinary least squares

used in curve_fit and leastsq).

Load the data contained in the following file

and use the scipy.optimize.curve_fit or scipy.optimize.leastsq (least-squares)

fitting function (curve_fit calls leastsq but doesn’t give as much output!)

to generate a line of best fit for the data.

You shold evaluate several possible functions for the fit including

- A straight line

f(x,a,b) = a*x + b - A quadratic fit

f(x,a,b,c) = a*x^2 + b*x + c - An exponential fit

f(x,a,b,c) = a*e^(b*x) + c

Generate an estimate of the goodness of fit to discriminate between these possible models.

Bonus section

Try the same again with the following data (you should be able to just change the filename!)

You should find that the goodness of fit has become worse. In fact the algorithm used

by Scipy’s optimize submodule is not always able to determine which of the models fits best.

Try using the scipy.odr submodule instead; a guide for how to do this can be found

here

Exercise

General tips:

- Use a function for the fitting; this will allow you to call the same fitting proceedures on multiple files easily!

- Create a dictionary of fitting functions, with their name as the key and the function

as values (see the additional advanced Python section to learn about the

lambdas used here, or stick with defining the functions the usual way, withdef). - Loop over the fitting functions to perform the same operations with each one.

Answer

import numpy as np

import scipy.optimize as scopt

def perform_fitting(filename):

funcs = {

'linear' : lambda x,a,b,c : a*x + b,

'quadratic' : lambda x,a,b,c : a*x*x + b*x + c,

'exponential' : lambda x,a,b,c : a*np.exp(b*x) + c,

}

data = np.fromfile(filename)

Npoints = len(data)

taxis = np.arange(Npoints)

for fname in funcs:

popt, pcov = scopt.curve_fit( funcs[fname], taxis, data)

yexact = funcs[fname](taxis, *popt)

residuals = data - yexact

ss_err = (residuals**2).sum()

ss_tot = ((data-data.mean())**2).sum()

rsquared = 1-(ss_err/ss_tot)

print("\n\n\nFitting: %s"%fname)

print("\nCurve fit")

print("popt:", popt)

print("pcov:", pcov)

print("rsquared:", rsquared)

if __name__ == "__main__":

perform_fitting("curve_fitting_data_simple.txt")

Output

The first part of the quesrtion generates the output below (the last line represents the R^2 goodness of fit statistic for each fit)

Fitting: quadratic

Curve fit

popt: [ 0.27742012 0.43227489 24.42625192]

pcov: [[ 1.24062405e-04 -2.35718583e-03 7.07155749e-03]

[ -2.35718583e-03 4.80617819e-02 -1.65474460e-01]

[ 7.07155749e-03 -1.65474460e-01 8.07571966e-01]]

rsquared: 0.99839145357

Fitting: linear

Curve fit

popt: [ 5.70325725 8.61330482 1. ]

pcov: inf

rsquared: 0.939693857665

Fitting: exponential

Curve fit

popt: [ 19.98312703 0.10003282 2.07108578]

pcov: [[ 2.35952209e-03 -5.30270860e-06 -3.10538435e-03]

[ -5.30270860e-06 1.20524441e-08 6.87521073e-06]

[ -3.10538435e-03 6.87521073e-06 4.24287754e-03]]

rsquared: 0.999998872202

We see from this that for non-noisy data using curve_fit performs relatively

well (i.e. the data was indeed exponential!).

Bonus section

If we run the same function on the file “curve_fitting_data_noisy.txt”, we get

Fitting: quadratic

Curve fit

popt: [ 0.36402064 1.39338512 33.74952291]

pcov: [[ 1.16491630e-02 -2.21334100e-01 6.64002296e-01]

[ -2.21334100e-01 4.51288594e+00 -1.55376545e+01]

[ 6.64002296e-01 -1.55376545e+01 7.58290698e+01]]

rsquared: 0.932781903212

Fitting: linear

Curve fit

popt: [ 8.30977729 13.0003464 1. ]

pcov: inf

rsquared: 0.887804486031

Fitting: exponential

Curve fit

popt: [ 37.66928574 0.08831645 -6.97576507]

pcov: [[ 5.63753689e+02 -6.41775284e-01 -6.99939382e+02]

[ -6.41775284e-01 7.38443618e-04 7.87263594e-01]

[ -6.99939382e+02 7.87263594e-01 8.90820497e+02]]

rsquared: 0.933020625779

i.e. as far as curve_fit is concerned, both quadratic and exponential are relatively good fits,

and the one that “wins out” will vary depending on the noise present.

However, if we add in the additional odrpack version of the stats

by adding

import scipy.odr as scodr

by the import statements, and the following to the loop over functions:

...

print("\nODRPACK VERSION")

funcnow = lambda params, x: funcs[fname](x, *params)

mod = scodr.Model(funcnow)

dat = scodr.Data(taxis,data)

odr = scodr.ODR(dat, mod, beta0 = [1.0, 1.0, 0.0])

out = odr.run()

out.pprint()

we also produce

Fitting: quadratic

...

ODRPACK VERSION

Beta: [ 0.55201606 -0.63943926 31.30145991]

Beta Std Error: [ 0.08980006 1.10407977 1.93744129]

Beta Covariance: [[ 0.00241881 -0.0263813 0.01923865]

[-0.0263813 0.36563691 -0.35584366]

[ 0.01923865 -0.35584366 1.12591663]]

Residual Variance: 3.333886943328627

Inverse Condition #: 0.0020568238964839263

Reason(s) for Halting:

Iteration limit reached

Fitting: linear

...

ODRPACK VERSION

Beta: [ 9.34657515 3.1507637 0. ]

Beta Std Error: [ 0.78204438 8.56164287 0. ]

Beta Covariance: [[ 0.14923625 -1.41774486 0. ]

[ -1.41774486 17.8865157 0. ]

[ 0. 0. 0. ]]

Residual Variance: 4.098155829657788

Inverse Condition #: 0.0437541938307872

Reason(s) for Halting:

Problem is not full rank at solution

Sum of squares convergence

Fitting: exponential

...

ODRPACK VERSION

Beta: [ 43.9540789 0.08427052 -17.18528396]

Beta Std Error: [ 2.68421883e+01 2.80794795e-02 3.04612945e+01]

Beta Covariance: [[ 2.69641542e+02 -2.79919119e-01 -3.04410441e+02]

[ -2.79919119e-01 2.95072729e-04 3.13666736e-01]

[ -3.04410441e+02 3.13666736e-01 3.47254336e+02]]

Residual Variance: 2.672077403678681

Inverse Condition #: 8.544672221858744e-06

Reason(s) for Halting:

Sum of squares convergence

i.e. we see that the residual variance and inverse condition are significantly lower for the exponential fitting (and these values are also more robust to noise).

Exercise : Interpolating data

Another common use of Scipy is signal interpolation.

Background : Interpolation

Interpolation is often used to resample data, generating data points at a finer spacing than the original data.

A simple example of interpolation is image resizing; when a small image is resized to a larger size, the “in-between” pixel values are generated by interpolating their values based on the nearest original pixel values.

Typical interpolation schemes include nearest, linear, and spline interpolation of various degrees. These terms denote the functional form of the interpolating function.

- nearest neighbor interpolation assigns each interpolated value the value of the nearest original value

- linear interpolation assigns each interpolated value the linearly weighted combination of the neighbouring values; e.g. if the value to be interpolated is 9 times closer to original point B than original point A, then the final interpolated value would be 0.1A + 0.9B.

- Spline interpolation approximates the region between the original data points as quadratic or cubic splines (depending on which spline interpolation order) was selected.

Download the data from here:

and use “linear” interpolation to interpolate the data to increase so that the new “x axis” has five times as many points between then original limits (i.e. you’ll resample the data to 5 times the density).

Bonus

Run the interpolation with “quadratic” and “cubic” interpolation also (it helps if your interpolation code was in a function!), and you should see much better results!

Exercise

Use the Numpy load function to load the data (as it was created with save!).

If you plot the data ( data[0] vs data[1] ), you should see a jagged sine curve.

Use the linspace function to create your new, denser x axis data.

After interpolation, you should end up with a slightly smoother sine curve.

Answer

import scipy.interpolate as scint

import numpy as np

import matplotlib.pyplot as plt # For plotting

def interp_data(data, kind="quadratic"):

plt.plot(data[0], data[1])